Every musician making music with a computer, whether a beginner or a seasoned pro, runs into this problem sooner or later: audio delays, erratic MIDI handling, crackles, and wild pops. Yes, latency is a pain. Fortunately for everybody involved in making music with a computer, there are several tricks that make living with latency less unpleasant.

OK, but…what is it, anyway?

First of all, it’s important to remember what latency is and why we are forced to deal with it. Latency, or rather latency time, is the delay between an action (pressing a key on a keyboard, for example) and the actual response (the sound reaching your ears).


When it comes to computer music, there are two types of latency: hardware and software. It’s also good to know that the two add up, and that the latency requirements are not the same for recording and mixing. It goes without saying that, when recording audio or MIDI, the goal is to get the lowest latency possible, measured in milliseconds (ms). If you press a key on your keyboard and the sound arrives a second later, you will have a hard time playing in time.

Oversimplifying things, you could say that hardware latency is the time it takes the computer and audio interface to process data and send it to the software sequencer (DAW), and then translate it back into audio that’s output through speakers or headphones. If you add a data-capturing device to the chain, like a MIDI/USB keyboard, latency increases slightly, due to the necessary communication between the device and the computer.


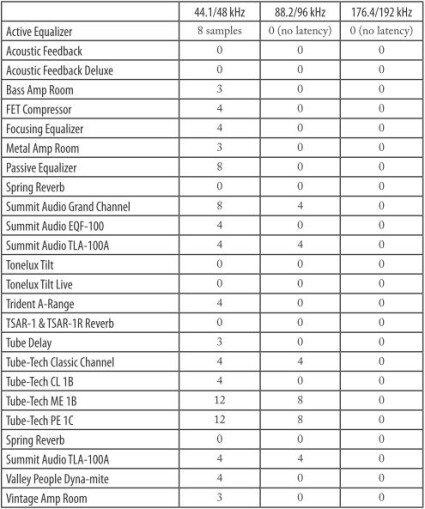

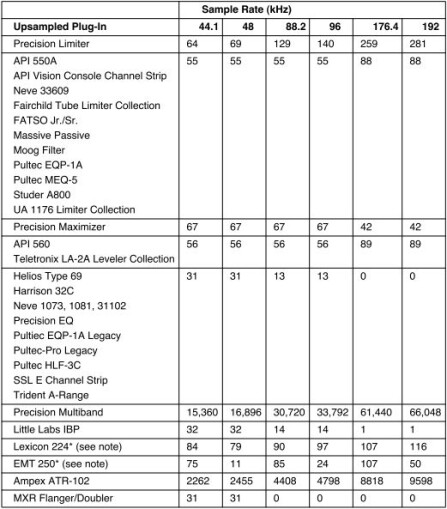

Software latency arises from the use of resource-hungry plug-ins in the DAW in which you’re recording or playing. In fact, every time you insert a virtual processor, you add a software layer that requires the computer to do some additional computing. And to complicate things even further, not all plug-ins are equal in this respect: some of them add far more milliseconds than others.

For example, here you have the data provided by Softube concerning the latency introduced by each plug-in.

The same from UAD:

Or even Voxengo, who provide the information directly on the plug-in’s interface:

Typically, the latency a plug-in introduces is expressed in samples. The more samples a plug-in “requires,” the more latency it adds.
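To relate those sample counts to the milliseconds you actually hear, divide by the sample rate. Here is a minimal sketch, assuming a 44.1 kHz project and plug-ins inserted in series on one track, so that their latencies add up. The plug-in names and sample counts are made up for illustration and are not taken from any manufacturer’s data.

```python
def samples_to_ms(samples: int, sample_rate_hz: int = 44_100) -> float:
    """Convert a latency expressed in samples to milliseconds."""
    return samples / sample_rate_hz * 1000.0


# Hypothetical plug-in chain: these sample counts are illustrative only.
chain = {"compressor": 64, "linear-phase EQ": 2048, "limiter": 128}

# Plug-ins in series on one track delay the signal one after another,
# so the total is simply the sum of the individual latencies.
total_samples = sum(chain.values())

for name, samples in chain.items():
    print(f"{name}: {samples} samples = {samples_to_ms(samples):.2f} ms")
print(f"total: {total_samples} samples = {samples_to_ms(total_samples):.2f} ms")
```

The same sample count means less time at a higher sample rate: pass `sample_rate_hz=96_000` to see the difference.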

Buffer at will

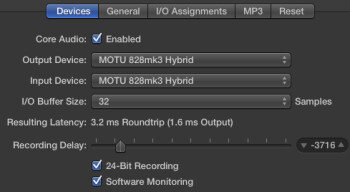

As you just saw, converting an audio source into digital data and then back into an analog signal requires time and hardware resources. You have to find the best compromise between a very low latency time, which costs lots of computer resources, and a very high latency that is easily manageable by the hardware but not very “playable” (you hear a distinct delay between your playing and the sound itself). To do so, you have no choice but to try different settings in the driver of the audio interface.

The most critical setting is the size of the memory buffer, which is the place where the data is temporarily stored until it is actually processed. If the buffer is small, the latency time will be shorter, but the computer may not manage to process the data in time, making you lose some of it (generally heard as audio crackling). In other words, the shorter the latency time, the more processing resources the computer needs to have available.
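One way to picture why small buffers are more demanding: the computer has to finish processing each buffer before the next one is due. The sketch below computes that deadline and the number of buffers per second for common buffer sizes. It assumes a 44.1 kHz sample rate, which the text does not state, and the function names are purely illustrative.

```python
def buffer_period_ms(buffer_samples: int, sample_rate_hz: int = 44_100) -> float:
    """Time available to process one buffer before the next one is due."""
    return buffer_samples / sample_rate_hz * 1000.0


def buffers_per_second(buffer_samples: int, sample_rate_hz: int = 44_100) -> float:
    """How many times per second the computer must deliver a full buffer."""
    return sample_rate_hz / buffer_samples


for size in (32, 64, 128, 256, 512, 1024):
    print(
        f"{size:>5} samples: deadline {buffer_period_ms(size):6.2f} ms, "
        f"{buffers_per_second(size):7.1f} buffers per second"
    )
```

With a small buffer the deadline is well under a millisecond, so any brief hiccup in the system is enough to miss it, and a missed deadline is what you hear as a crackle.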

What’s better: quick but jerky, or stable but drowsy?

In order to illustrate the relation between the size of the buffer and the induced latency time, here you have two examples of extreme settings:

With a 32-sample buffer (see screenshot above), the global latency time is 3.2 ms. Result: You won’t perceive any delay, but the system will use more resources, which could easily lead to crackles.

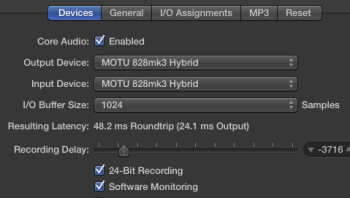

In this second example, the buffer is set to 1024 samples and the system is more comfortable. However, the latency time has risen to 48.2 ms! At that point, playing a virtual instrument in time is almost impossible.
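These two figures can be roughly reproduced with a simple model: one buffer on the way in, one buffer on the way out, plus a fixed overhead for the converters and the driver. This is only a sketch under assumptions. The sample rate is not given in the text, so 44.1 kHz is assumed, and the fixed overhead of 1.75 ms is not a documented value but the number that makes both examples fit.

```python
def round_trip_ms(
    buffer_samples: int,
    sample_rate_hz: int = 44_100,      # assumed, not stated in the article
    fixed_overhead_ms: float = 0.0,    # converters, driver: varies per interface
) -> float:
    """Simplified round-trip estimate: input buffer + output buffer + overhead."""
    one_buffer_ms = buffer_samples / sample_rate_hz * 1000.0
    return 2 * one_buffer_ms + fixed_overhead_ms


# Overhead chosen by hand so that the model matches the two reported values.
ASSUMED_OVERHEAD_MS = 1.75

for size, reported_ms in ((32, 3.2), (1024, 48.2)):
    estimate = round_trip_ms(size, fixed_overhead_ms=ASSUMED_OVERHEAD_MS)
    print(f"{size:>5} samples: estimated {estimate:.1f} ms, reported {reported_ms} ms")
```

The overhead stays the same whatever the buffer size, which is why shrinking the buffer eventually stops paying off: below a certain point, most of the remaining latency comes from the hardware itself.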

As you can see, ideally, you should find a buffer size that provides the best compromise between execution speed, processor load and user comfort.

In the next installment, we’ll see some methods to find the best settings and, especially, in which cases you should use them.