The goal of this article is to help you develop knowledge of basic acoustic principles. This in turn will help you understand, and eventually master, the basic techniques of sound engineering and recording. Each section has a theme that is first defined in technical terms and then discussed in practical terms with respect to audio equipment.

Definition of timbre

Timbre (pronounced /tam-ber’/) is a sound’s identity. This identity depends on the physical characteristics of the sound’s medium (the matter or substance that supports the sound). Take an A at 440 Hertz played at 60 decibels: we can immediately tell whether it comes from a violin, a saxophone, or a piano, even though it is the same note at the same amplitude. The difference lies in how the sound is produced: a string, an air column, etc. Moreover, the sound isn’t set in motion by the same “tool”: a bow for the violin strings, a reed and an air column for the sax, and felt-covered hammers that strike the piano strings. It’s the different physical characteristics of the medium and the “tool” that determine the characteristic sound waves in each case. Later we will also see how a sound chamber adds another dimension to this definition.

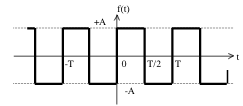

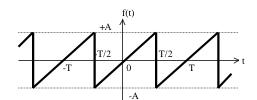

Waveform

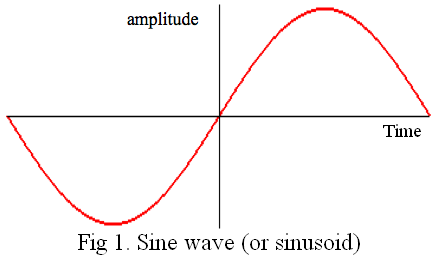

The most basic waveform is the sine wave (sinusoid) (fig. 1). It could be considered the atom of sound. Pure sinusoidal sounds are rare (tuning forks, drinking glasses being rubbed) and at one time were believed to have strange powers over human behavior. Most of the sounds that surround us are more complex.

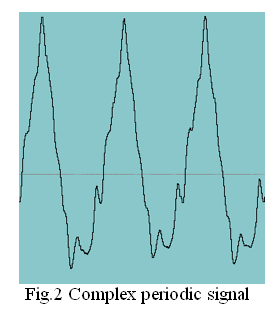

This means that inside a sound we perceive as a single entity, there is a superposition of many sine waves that have, in a way, fused together into one sound. The nature of this superposition determines the resulting waveform (fig. 2) and is responsible for the sound’s timbre. The set of component sine waves is called the spectrum.

Fig. 2: square wave

Fig. 3: sawtooth wave (or saw wave)

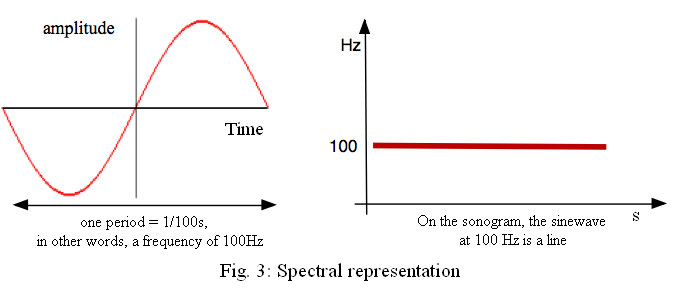
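To make this superposition concrete, here is a minimal Python sketch (our own illustration, not from the original article) that builds the square and sawtooth waves of figs. 2 and 3 by adding sine waves. It relies on their standard Fourier series: a square wave contains only odd harmonics, each with an amplitude proportional to 1/rank, while a sawtooth contains every harmonic, again with an amplitude proportional to 1/rank. The function names and the number of partials are illustrative choices.

```python
import math

def square_wave(t: float, f0: float, n_partials: int) -> float:
    """Approximate a square wave at f0 Hz with its first n_partials odd harmonics.

    Fourier series: (4/pi) * sum over odd k of sin(2*pi*k*f0*t) / k
    """
    total = 0.0
    for i in range(n_partials):
        k = 2 * i + 1                       # odd ranks only: 1, 3, 5, ...
        total += math.sin(2 * math.pi * k * f0 * t) / k
    return 4 / math.pi * total

def sawtooth_wave(t: float, f0: float, n_partials: int) -> float:
    """Approximate a sawtooth wave at f0 Hz with its first n_partials harmonics.

    Fourier series: (2/pi) * sum over k >= 1 of (-1)**(k+1) * sin(2*pi*k*f0*t) / k
    """
    total = 0.0
    for k in range(1, n_partials + 1):      # every rank: 1, 2, 3, ...
        total += (-1) ** (k + 1) * math.sin(2 * math.pi * k * f0 * t) / k
    return 2 / math.pi * total

# A quarter of the way through a 100 Hz cycle (t = 2.5 ms):
print(square_wave(0.0025, 100, 1000))    # close to 1.0 (top of the square)
print(sawtooth_wave(0.0025, 100, 1000))  # close to 0.5 (halfway up the ramp)
```

The more partials you add, the closer the sum gets to the ideal sharp-edged shape; with only a handful, the result is a rounder wave with fewer highs.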

Spectral representation

There are many ways of graphically representing sound. For instructional purposes we have chosen to use a spectrogram for its clarity and simplicity.

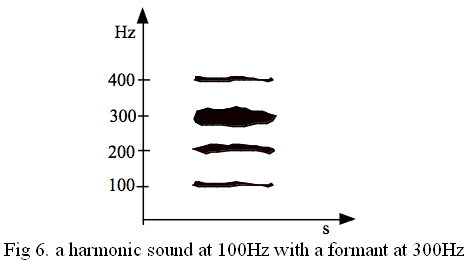

Horizontally: time in seconds. Vertically: frequency in Hertz. A sine wave (sinusoid) at 100 Hertz is represented by a horizontal line at the height corresponding to 100 Hz. A harmonic sound at 100 Hertz is represented by superimposed lines corresponding to sine waves at 100, 200, 300… Hertz, that is, n × 100 Hertz. The length of each line represents the duration of the sound.
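A spectrogram is obtained by measuring, slice after slice, how much energy the sound has at each frequency. The Python sketch below (our own illustration, not a tool used in the article) does this for a single slice covering the whole sound, using a plain discrete Fourier transform: it builds a 100 Hz harmonic sound with three components and recovers the three horizontal lines at 100, 200 and 300 Hz. The sample rate, the 1/rank amplitudes and the detection threshold are arbitrary choices; real spectrogram tools use the much faster FFT and a window function.

```python
import cmath
import math

SAMPLE_RATE = 2000  # Hz; illustrative value, well above twice the highest component

def harmonic_sound(f0: float, n_harmonics: int, seconds: float = 1.0) -> list[float]:
    """Samples of a sound made of harmonics 1..n_harmonics of f0 (harmonic k at amplitude 1/k)."""
    n = int(SAMPLE_RATE * seconds)
    return [
        sum(math.sin(2 * math.pi * k * f0 * i / SAMPLE_RATE) / k
            for k in range(1, n_harmonics + 1))
        for i in range(n)
    ]

def spectral_lines(signal: list[float], max_hz: float, threshold: float = 0.05) -> list[float]:
    """Frequencies (Hz) whose DFT magnitude exceeds threshold, up to max_hz.

    A plain (slow) DFT of the whole signal, i.e. one "column" of a spectrogram.
    Magnitudes are scaled by 2/N so that a sine of amplitude 1 reads as 1.0.
    """
    n = len(signal)
    bin_hz = SAMPLE_RATE / n                # frequency spacing between DFT bins
    lines = []
    for k in range(1, int(max_hz / bin_hz) + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        if 2 * abs(acc) / n > threshold:
            lines.append(k * bin_hz)
    return lines

print(spectral_lines(harmonic_sound(100, 3), max_hz=500))  # [100.0, 200.0, 300.0]
```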

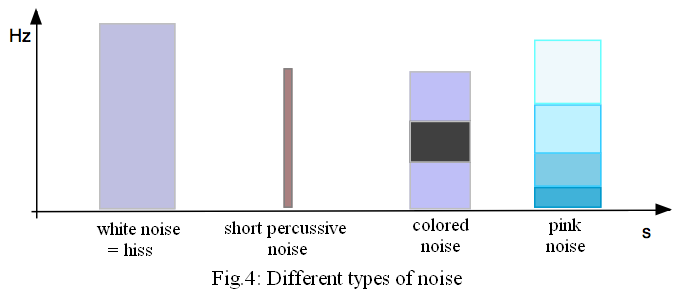

Noise

Let’s imagine a case where all the sine wave frequencies perceptible to the human ear (from 20 Hz to 20 kHz), all with the same amplitude, are “mixed” into one sound signal. We get what is called “white noise”, in other words “hiss”. If the white noise is very short, we perceive it as a kind of short percussive sound. Consonants belong to this category, as does the noise a sound medium produces when it receives the attack of the “tool” that “kick-starts” it. This noise corresponds to the time it takes for the sound wave to stabilize and take its final form. The “rubbing” of a bow on a string is similar to a hissing sound, while a hammer hitting a piano string is similar to a percussive sound. These notions will be dealt with in greater depth when we get to envelopes and transients. When the noise frequencies are confined between certain limits, we speak of a noise band.
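In a computer, white noise is usually produced not by literally adding up thousands of sine waves but by generating independent random samples. The sketch below (an illustration; the seed, length and band limits are arbitrary) checks the property that defines white noise: on average, a low band and a high band of the spectrum carry the same power.

```python
import cmath
import math
import random

def white_noise(n: int, seed: int = 1) -> list[float]:
    """n independent Gaussian samples (mean 0, variance 1): a digital white noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def mean_band_power(signal: list[float], k_lo: int, k_hi: int) -> float:
    """Average power of DFT bins k_lo .. k_hi - 1 (plain DFT, power normalized by N)."""
    n = len(signal)
    total = 0.0
    for k in range(k_lo, k_hi):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        total += abs(acc) ** 2 / n
    return total / (k_hi - k_lo)

noise = white_noise(1024)
low = mean_band_power(noise, 1, 256)     # from 0 up to a quarter of the sample rate
high = mean_band_power(noise, 256, 512)  # from a quarter of the sample rate up to Nyquist
print(low / high)  # close to 1: the energy is spread evenly across frequencies
```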

If the energy is particularly concentrated in one frequency zone, we speak of colored noise around that zone. Pink noise, for example, is white noise filtered so that its power density decreases by 3 dB per octave.
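Why 3 dB? Pink noise has a power density proportional to 1/f, so doubling the frequency (one octave up) halves the density, and 10 × log10(1/2) ≈ -3.01 dB. A consequence is that every octave carries the same total power. This small Python sketch (hypothetical function name) does the arithmetic.

```python
import math

def pink_density_db(f: float, f_ref: float) -> float:
    """Power density of pink noise at f, in dB relative to its density at f_ref.

    Assumes the usual definition: power density proportional to 1/f.
    """
    return 10 * math.log10(f_ref / f)

print(round(pink_density_db(200, 100), 2))   # -3.01: one octave up
print(round(pink_density_db(20000, 20), 1))  # -30.0: about 10 octaves, 20 Hz to 20 kHz
```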

Harmonic Sounds

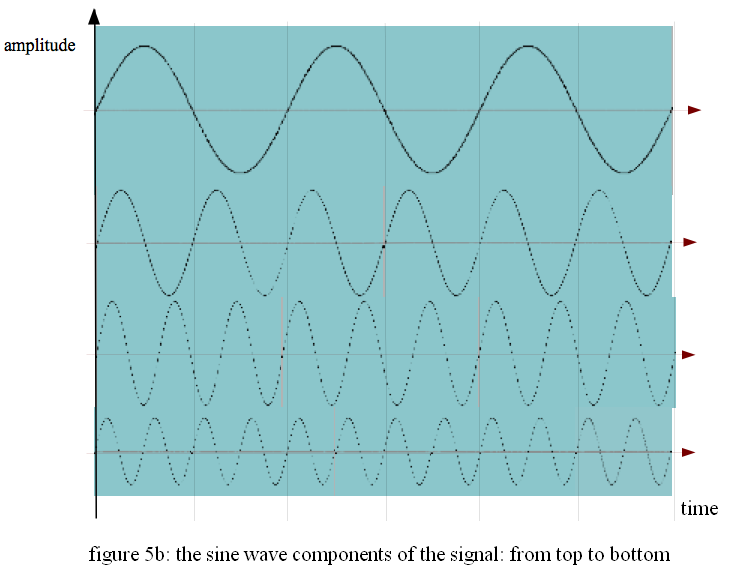

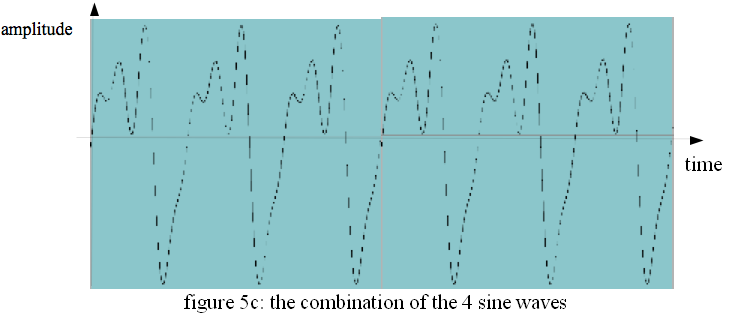

Having highlighted the superimposed, or complex, nature of sound, we now focus on a specific category of frequencies in a sound spectrum: harmonics. A harmonic sound is one whose component sine waves obey the mathematical law described by the Fourier series, which can be summarized as follows: a complex periodic signal is made up of a number of component frequencies that are integer multiples of the fundamental frequency.
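In standard notation (the symbols are ours, not the article’s), a periodic sound with fundamental $f_0$ can be written as a sum of sine waves whose frequencies are whole multiples $n f_0$, where $A_n$ and $\varphi_n$ are the amplitude and phase of the harmonic of rank $n$ (any constant offset is ignored here):

$$
x(t) = \sum_{n=1}^{\infty} A_n \sin\left(2\pi n f_0 t + \varphi_n\right)
$$

With $f_0 = 100$ Hz, the terms $n = 1, 2, 3, \dots$ are the components at 100, 200, 300 Hz, and so on.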

An example of a harmonic sound: a sound at 100 Hertz whose component waves are at 100, 200, 300, 400, 500 and 600 Hertz. The perceived pitch is that of the lowest frequency: 100 Hertz. The following component waves (2 × 100, 3 × 100, 4 × 100, etc.) are integer multiples of it and are called harmonics. The lowest frequency, on which they are based, is called the fundamental. The number, or “rank”, of a harmonic is the integer by which the fundamental is multiplied: for example, the 3rd harmonic is the one at 300 Hz (fig. 5).
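The arithmetic of harmonics is simple enough to write down directly. This small Python sketch (hypothetical helper names) lists the harmonics of a fundamental, gives the rank of a component, and tests whether a set of components is harmonic. Like the example above, it assumes the lowest component is the fundamental; the small tolerance allows for rounding in measured frequencies.

```python
from typing import Optional

def harmonics(fundamental: float, count: int) -> list[float]:
    """Frequencies of the first `count` harmonics (rank 1 is the fundamental itself)."""
    return [rank * fundamental for rank in range(1, count + 1)]

def harmonic_rank(freq: float, fundamental: float, tol: float = 1e-6) -> Optional[int]:
    """Rank of freq as a harmonic of fundamental, or None if it is not an integer multiple."""
    ratio = freq / fundamental
    rank = round(ratio)
    return rank if rank >= 1 and abs(ratio - rank) < tol else None

def is_harmonic(partials: list[float]) -> bool:
    """True if every component is an integer multiple of the lowest one."""
    f0 = min(partials)
    return all(harmonic_rank(f, f0) is not None for f in partials)

print(harmonics(100, 6))                                   # [100, 200, 300, 400, 500, 600]
print(harmonic_rank(300, 100))                             # 3
print(is_harmonic([100, 200, 300]), is_harmonic([100, 270, 540]))  # True False
```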

The pitch of a harmonic sound is easily perceived by the ear, and such sounds usually have an “in tune” quality. That’s why melodic musical instruments are designed to produce harmonic spectra.

Noises, like those we referred to earlier, are aperiodic signals. They are characteristic of percussion instruments, for example.

The distribution of energy in the spectrum

Regions of relatively high intensity in a sound spectrum are called formants. When such a region covers a band of consecutive frequencies, we speak of a formant zone between x and y Hertz. This distribution of energy plays an important role in the perception of timbre, as do the number of components in the spectrum, their distribution, and whether that distribution is regular or not.
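There is no universal numeric threshold that turns a strong region into a formant. The hypothetical helper below simply groups consecutive partials whose level stays within 6 dB of the loudest one (an arbitrary choice) and reports each group as a formant zone “between x and y Hertz”. The example spectrum is invented for illustration.

```python
def formant_zones(partials: list[tuple[float, float]],
                  threshold_db: float = -6.0) -> list[tuple[float, float]]:
    """Return (low_hz, high_hz) zones of consecutive partials whose level is
    within threshold_db of the loudest partial.

    partials: (frequency_hz, amplitude) pairs, sorted by frequency.
    """
    peak = max(amp for _, amp in partials)
    limit = peak * 10 ** (threshold_db / 20)   # dB threshold -> amplitude threshold
    zones = []
    start = end = None
    for freq, amp in partials:
        if amp >= limit:
            if start is None:        # a new zone begins
                start = freq
            end = freq               # extend the current zone
        elif start is not None:      # the current zone just ended
            zones.append((start, end))
            start = None
    if start is not None:            # close a zone that reaches the last partial
        zones.append((start, end))
    return zones

# Invented harmonic spectrum at 100 Hz with most energy between 400 and 700 Hz
spectrum = [(100, 0.2), (200, 0.3), (300, 0.4), (400, 0.6), (500, 1.0),
            (600, 0.9), (700, 0.7), (800, 0.3), (900, 0.1)]
print(formant_zones(spectrum))  # [(400, 700)]
```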

Some examples of graphic representations

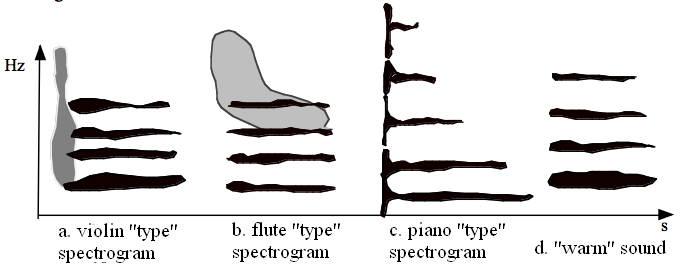

a- violin: hiss noise at the attack, harmonic spectrum

b- flute: harmonic spectrum

c- piano: noise of the hammer attack, percussive sound, and a spectrum whose harmonics are not quite regular

d- warm sound: few harmonics but a regular distribution of energy from low to high

e- piercing sound: harmonic sound with a lot of intensity in the highs

f- hollow sound: few harmonics in the mids

g- nasal sound: weak lows, intense mids, weak highs

h- non-harmonic sound: like an untuned bell

i- square signal, odd harmonics only: like a clarinet sound

EQing on a console

It’s the EQ section of a console that allows us to tweak or correct timbre. Depending on the model, the EQ section is more or less sophisticated and offers different adjustment possibilities. We won’t deal with the simple high/low EQ knobs or switches found on hi-fi amplifiers or entry-level mixers, which are only meant to adapt a sound to a specific listening room. We’re more concerned with the EQ controls found on small modern digital consoles or built into most major recording software. Keep in mind that EQ is mainly used for one reason: to correct, not in the hope of improving the recorded signal. You can never turn a mediocre recording (due to bad mic placement or a poor-quality mic) into a great sound with EQ alone. Equalizers split the audible frequency range (20 Hz to 20 kHz) into several sub-ranges, which is why we generally talk about highs, high mids, low mids, and lows. So the first thing to do, before touching any knobs, is to determine in which frequency range the problem lies, and then the nature of the problem. Is it too much coloration that went undetected during recording, a parasitic noise from the environment, or a masking effect caused by other instruments?

What does it look like?

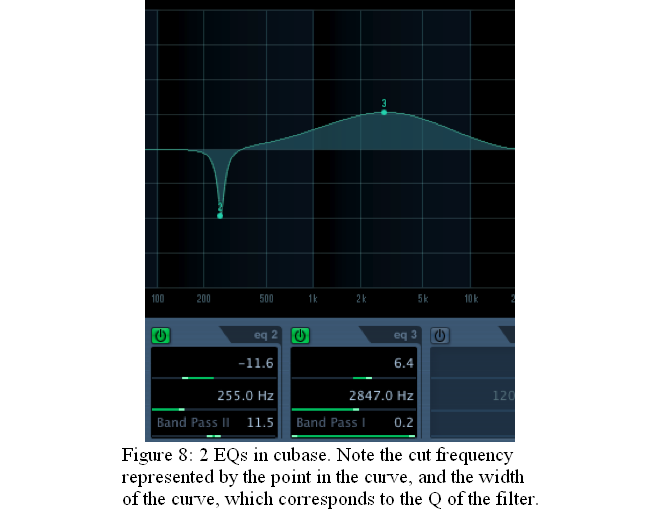

Equalizers are, quite simply, filters acting on harmonics and partials. What sets them apart is that they can not only remove component frequencies but also amplify chosen frequency zones. Of course, if there is nothing in the signal in that range, only hiss will be added! Good EQ sections generally have 4 bands, each offering at least 2 controls: frequency and gain. These are called semi-parametric. There is often a third control, the bandwidth or “Q”, which widens or narrows the frequency range (bandwidth) affected by the filter. When this third control is present, the equalizer is called a parametric equalizer. On a console, the frequency can be adjusted between the upper and lower limits of each filter’s sub-range (in software, these limits no longer exist!).
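In software, each EQ band is typically a small digital filter. One widely used recipe, shown here as an assumption rather than what Cubase or any particular console actually does, is the peaking filter from Robert Bristow-Johnson’s “Audio EQ Cookbook”. The Python sketch shows how the three parametric controls, frequency (`f0`), gain (`gain_db`) and `q`, turn into filter coefficients, and checks that the response at the chosen frequency equals the requested gain. The 48 kHz sample rate and the settings are illustrative.

```python
import cmath
import math

def peaking_eq(fs: float, f0: float, gain_db: float, q: float):
    """Biquad coefficients for one peaking EQ band (RBJ Audio EQ Cookbook formulas).

    Returns (b, a), normalized so that a[0] == 1.
    """
    A = 10 ** (gain_db / 40)                 # square root of the linear gain at f0
    w0 = 2 * math.pi * f0 / fs               # center frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)           # larger q -> smaller alpha -> narrower band
    b = [1 + alpha * A, -2 * math.cos(w0), 1 - alpha * A]
    a = [1 + alpha / A, -2 * math.cos(w0), 1 - alpha / A]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response_db(b, a, fs: float, f: float) -> float:
    """Magnitude of the biquad's frequency response at f Hz, in dB."""
    z1 = cmath.exp(-2j * math.pi * f / fs)   # z^-1 on the unit circle
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

b, a = peaking_eq(48000, 1000, gain_db=6.0, q=1.0)   # +6 dB "hump" at 1 kHz
print(round(response_db(b, a, 48000, 1000), 3))      # 6.0 at the chosen frequency
```

Frequencies far from `f0` stay close to 0 dB, so only the chosen zone is boosted or cut.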

The gain knob sets, in dB, how much the filter affects the chosen frequency. As fig. 8 (borrowed from Cubase) shows, this gain can be positive or negative. The curve can also be wider (hump shape) or narrower (peak shape); this shape corresponds to the bandwidth, which is set with the Q control.
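Two conversions help read a curve like fig. 8. A gain in dB maps to an amplitude multiplier of 10^(dB/20), and Q is commonly related to bandwidth in octaves by Q = √(2^N) / (2^N - 1), where N is the distance in octaves between the band edges, measured around a geometric center frequency (analog definition; some digital equalizers define Q slightly differently). This Python sketch, with hypothetical function names, shows that a higher Q means a narrower band.

```python
import math

def db_to_amplitude(gain_db: float) -> float:
    """Amplitude multiplier for a gain in dB (20 * log10 convention)."""
    return 10 ** (gain_db / 20)

def octaves_to_q(octaves: float) -> float:
    """Q of a band whose edges f1 < f2 are `octaves` apart, with Q = f0 / (f2 - f1)
    and f0 = sqrt(f1 * f2) (analog definition)."""
    ratio = 2 ** octaves                     # f2 / f1
    return math.sqrt(ratio) / (ratio - 1)

def q_to_octaves(q: float) -> float:
    """Inverse of octaves_to_q: bandwidth in octaves for a given Q."""
    return 2 / math.log(2) * math.asinh(1 / (2 * q))

print(round(db_to_amplitude(6), 3))          # 1.995: +6 dB roughly doubles the amplitude
print(round(db_to_amplitude(-3), 3))         # 0.708
print(round(octaves_to_q(1), 3))             # 1.414: a one-octave "hump"
print(q_to_octaves(4) < q_to_octaves(0.7))   # True: higher Q, narrower "peak"
```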

How to modify timbre

Always keep in mind that any EQing of an instrument is destructive with respect to the recorded sound, just as the recording itself is, in many cases, an imperfect copy of the original. So be careful! Before touching anything, think about what you want to accomplish with EQ: I want a “warmer” sound, I want to cut the bass, I want my instrument to stand out in the mix, I want to get rid of that annoying resonance that came from the studio… The table below is offered as a kind of “quick guide” chart: a checklist that will help you control and master your timbre EQing. Don’t forget, however, to listen: your ears are the ultimate judge.

| Type of EQing | Your goal | Action | Useful comments |
|---|---|---|---|
| Adjust the timbre of an instrument recorded by a mic. | Change the timbre of an instrument that has too much or not enough highs or lows. | Determine which frequency band needs to be changed: 1) set the gain to +12 dB, then sweep the frequency knob until you hear the zone where the signal increases the most. You have found the frequency to cut! 2) Bring the gain back to 0 dB, then lower it gradually until you get what you want. 3) Compare the original signal with the new one by bypassing the EQ. | Make the adjustments with the chosen instrument in solo mode, then bring back the other instruments to evaluate the new sound in the mix. Start with a wide bandwidth (hump shape) and narrow it only if necessary. |
| | Make an instrument stand out or blend into the mix. | 1) As above, determine which frequency band needs to be changed; you may need two filters if the instrument has a wide range. 2) Narrow the bandwidth (raise the Q) as much as possible while still covering the instrument’s range. 3) Raise the filter’s gain slightly (no more than 3 to 5 dB!). | This doesn’t always work, because other instruments may be in the same frequency range, so boosting the soloist boosts them too. In that case we have to fall back on a multi-band compressor. |
| Bad quality of a spoken voice recorded by a mic. | Correct “problems” on certain consonants. | The culprits are probably “pa”, “da” and similar syllables (plosives): the solution lies at the bottom end of the frequency spectrum, more specifically in the noise of the attack. Some consoles feature a fixed high-pass filter designed precisely to limit this problem. Choose the “low” EQ band and reduce the gain by 2 to 3 dB. Use “shelving” if it isn’t already the default filter type; if there is no shelving, try widening the bandwidth (lowering the Q) as much as possible. | Again, this doesn’t always work, but it’s easier on a spoken voice than on a singing voice. If the problem persists despite your efforts, you’ll have to use a compressor. |
| Unwanted sound during playback or in the recording. | Remove a parasitic noise or a background noise linked to the place where it was recorded. | Find the parasite frequency as described above. If it’s a cable or electrical problem, it will be around 50 Hz (60 Hz in North America) or one of its harmonics. Once the frequency has been found, narrow the bandwidth as much as possible (raise the Q) and bring down the gain until the signal is no longer a nuisance. | You should have noticed it during the recording! All we can do now is make the best of it. The EQ will be more effective if the frequency is accurately targeted. But everything else in the same range will disappear along with the parasite! |
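The recipe for removing hum (find the frequency, narrow the band as much as possible, pull the gain right down) amounts to a notch filter. The Python sketch below is an illustration under assumptions: it uses the notch formula from Robert Bristow-Johnson’s “Audio EQ Cookbook”, a 48 kHz sample rate and a Q of 30, none of which come from the article. Mains hum sits at 50 Hz in Europe (60 Hz in North America), so the first helper lists the harmonics you may also have to notch out.

```python
import cmath
import math

def hum_frequencies(mains_hz: float, fs: float, count: int) -> list[float]:
    """The mains frequency and its harmonics (ranks 1..count), kept below Nyquist (fs / 2)."""
    return [k * mains_hz for k in range(1, count + 1) if k * mains_hz < fs / 2]

def notch(fs: float, f0: float, q: float):
    """Biquad notch coefficients (RBJ Audio EQ Cookbook), normalized so a[0] == 1."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)           # higher q -> narrower notch
    b = [1.0, -2 * math.cos(w0), 1.0]
    a = [1 + alpha, -2 * math.cos(w0), 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def magnitude(b, a, fs: float, f: float) -> float:
    """Linear gain of the biquad at f Hz (1.0 = unchanged, 0.0 = removed)."""
    z1 = cmath.exp(-2j * math.pi * f / fs)   # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 ** 2
    den = a[0] + a[1] * z1 + a[2] * z1 ** 2
    return abs(num / den)

FS = 48000
print(hum_frequencies(50, FS, 4))                # [50, 100, 150, 200]
b, a = notch(FS, 50, q=30)                       # narrow notch on the 50 Hz hum
print(magnitude(b, a, FS, 50) < 1e-6)            # True: the hum is removed
print(round(magnitude(b, a, FS, 1000), 3))       # 1.0: far away, nothing changes
```

The narrowness that leaves 1 kHz untouched is also why, as the table warns, any genuine musical content right at the notched frequency disappears along with the hum.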