Scott Gershin is one of the top sound designers working today. He's been creating sounds for over 30 years and has a credit list a mile long, which includes films such as Nightcrawler, Pacific Rim, Hellboy 2, Star Trek, American Beauty, and Shrek, among many others. His game credits include Doom (2016), Resident Evil, Epic Mickey, Gears of War, and Fable. These days, Gershin is Creative Director/Director of Editorial at Technicolor and is the leader of its Sound Lab team.

Audiofanzine spoke to him recently about a range of sound-design-related topics, including immersive audio, how he creates sounds, his software and hardware tools, and more.

I remember walking around the most recent AES, and everybody was talking about their immersive products. Have you worked on immersive sound projects, and how do they differ from film or TV sound design?

Yeah, I’ve done a lot of projects actually—VR, MR, AR. I bet I’ve done probably 20 or more projects. For me, VR is a combination of what I know in film and gaming. Sometimes it’s interactive, sometimes not. One of the two popular forms of VR is VR 360, which is basically a linear piece with spatial audio played through the goggles or played through an app. You know, Facebook, YouTube, or something of that ilk. And then there’s interactive VR, which uses a game engine. It’s all about object-oriented audio. You take sounds, you place them in a spatial sphere, and you can manipulate when and how they’re played, similar to a game engine. But a lot of VR that’s not game VR is interactive storytelling, slash VR.

Talk about the interactivity between the viewer and whatever the VR delivery medium is.

There are many ways to become mobile in VR. In some of them you can actually walk around the room physically. In others you can teleport: “I want to go over there,” and then you move over there. So, for an object in the scene, let’s say there was a phonograph, and you teleported to the right of it, you’ll now hear the phonograph to your left. And if you turn around, it’ll be behind you. Think of sound sources, the things that emit sounds, as sound emitters (sound objects). Then it’s decided how and when those objects are going to play. There are many attributes that I can control, like on a mixing console, and then I use some form of spatial technology (there are numerous ones) to let the audience know where that object is in that spherical space. Above you, below you, around you, stuff like that.
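As a rough illustration of the phonograph example, here is a minimal Python sketch of how an emitter's direction can be worked out relative to the listener. The `Listener` class, the `relative_azimuth` function, and the 2D coordinate convention are all hypothetical, chosen for this example only; real engines and spatializers work in 3D and use their own conventions.

```python
import math
from dataclasses import dataclass


@dataclass
class Listener:
    x: float
    y: float
    yaw_deg: float  # assumed convention: 0 = facing +y, positive = turned to the right


def relative_azimuth(listener: Listener, src_x: float, src_y: float) -> float:
    """Direction of a sound emitter relative to where the listener is facing.

    Returns degrees in [-180, 180): 0 is straight ahead, positive values are
    to the right, negative values are to the left, and -180 is directly behind.
    """
    dx = src_x - listener.x
    dy = src_y - listener.y
    bearing = math.degrees(math.atan2(dx, dy))  # 0 = +y axis, 90 = +x axis
    return (bearing - listener.yaw_deg + 180.0) % 360.0 - 180.0


# Phonograph at the origin; the listener teleports 2 m to its right, still facing +y.
print(relative_azimuth(Listener(2.0, 0.0, 0.0), 0.0, 0.0))    # about -90: on the left

# Facing the phonograph, then turning around.
print(relative_azimuth(Listener(2.0, 0.0, -90.0), 0.0, 0.0))  # about 0: straight ahead
print(relative_azimuth(Listener(2.0, 0.0, 90.0), 0.0, 0.0))   # about -180: behind
```

The emitter itself never moves here. Only the listener's position and heading change, and the direction handed to the spatializer follows from that.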

And pardon my ignorance on this, but when you do a mix for that kind of a project, how many channels is it, typically?

It’s not channel-based. It’s object-based. You could have 1,000 objects. If you’re in a room, think of how many items in that room would emit sound. Your window, the background, three people in the room, and then not only that but the emission of those sounds can also be based on where they are. Are they in the other room? Am I going to be putting in a low-pass room simulation on it? There are many, many ways, but we’ve been doing this in gaming for 30 years.
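To make the "other room" idea concrete, here is a minimal Python sketch of one possible approach: a basic one-pole low-pass filter applied to an emitter only when it is in another room. The function names, the `in_other_room` flag, and the 800 Hz cutoff are assumptions made for illustration; game engines and audio middleware ship their own occlusion and room-simulation tools, which are considerably more sophisticated.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate=48000):
    """Smooth a list of samples with a basic one-pole low-pass filter."""
    # Smoothing coefficient derived from the cutoff frequency.
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out = []
    y = 0.0
    for x in samples:
        y += a * (x - y)  # move a fraction of the way toward the new input
        out.append(y)
    return out


def render_emitter(samples, in_other_room):
    """Muffle an emitter that is behind a wall; pass it through otherwise."""
    if in_other_room:
        return one_pole_lowpass(samples, cutoff_hz=800.0)  # illustrative cutoff
    return list(samples)


# A harsh, full-scale alternating signal stands in for bright source material.
bright = [1.0, -1.0] * 500
muffled = render_emitter(bright, in_other_room=True)
print(max(abs(v) for v in muffled[-100:]))  # much smaller than 1.0
```

Each of the potentially hundreds of objects in a scene would carry its own state like this, which is part of why the mix is described in terms of objects rather than channels.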

But the output to the user is just still stereo output?

There are a couple of ways to do it, but the most common is with headphones, as binaural. What spatial technologies are available on headphones? Well, you can do binaural rendering or binaural recording. You could do Ambisonics: first, second, or third order, whatever. There are many ways to deal with it, but eventually it goes through what they call a binaural render, which is really two speakers. But there are now some types of VR where you wear the computer in a backpack and roam in an open, free environment. Sometimes with headphones, sometimes without.
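For readers unfamiliar with Ambisonics, the following Python sketch shows the textbook first-order encoding of a mono sample into four channels (W, X, Y, Z). It assumes the traditional B-format weighting, with W scaled by 1/√2, and an azimuth measured counterclockwise from straight ahead; other channel orderings and normalizations exist, and the function name `encode_first_order` is hypothetical. The later binaural render stage, which turns these channels into a two-channel headphone feed, is not shown.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumed convention: azimuth 0 = front, 90 = left; elevation 90 = straight up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front/back
    y = sample * math.sin(az) * math.cos(el)   # left/right
    z = sample * math.sin(el)                  # up/down
    return w, x, y, z


# A source directly to the left ends up almost entirely in the Y channel.
print(encode_first_order(1.0, azimuth_deg=90.0, elevation_deg=0.0))
```

Higher orders add more channels and sharper directional resolution, but the principle of encoding direction into a fixed set of channels stays the same.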

Wow. Crazy.

What’s great about VR is that it’s the wild, wild west. There are very few standards. It just keeps reinventing itself in better ways to work.

What is your main software when you’re mixing for this?

There are two big ones. They don’t do everything, but a lot of people use either Unity or Unreal Engine. They tend to be very popular. Then, inside of that, you can use their audio tools, or go outside of it and end up using something like (Audiokinetic) Wwise.

Okay.

Or FMOD or something like that.

I’ve heard of FMOD, used for games.

Yes, they’re typically the audio game engines. They’re to games what Pro Tools and Nuendo are to post-production.

And as far as creating sounds for VR goes, is it a big difference from other types of sound design?

No, I find the creation of sounds is very similar to what we do in gaming.

Give me a sense of how you go about it when you have a sound to create. What’s your typical workflow?

Again, the workflow is similar to the workflow for gaming.

Okay.

You’ve got to go backward actually. First, you’ve got to identify the technology you’re going to use. And what tools are available to you. And what tools the client wants to invest in. From playback tools to spatial tools to how it’s going to be put together. You’ve got to kind of figure out the structure of how the game is going to be utilized from a technology standpoint. Once you understand that, it will give you the parameters of what you can and can’t do.

That makes sense.

At that point, we identify those areas that are linear and those areas that are interactive, and make some creative choices as to the best approach for a VR title. It’s not unusual for them to have both a linear component and an interactive component. So, you can approach it like in Ableton, where you press a button and something triggers. It could be a loop, or it could be something like a sampler. Because there’s a certain amount of interactivity, when the user hits a button, moves into a place, walks into an object, or interacts with an object, those sounds will be triggered like in a sampler or Ableton. So, essentially, the user is actually playing a musical instrument without knowing it.
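The sampler analogy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `TriggerMap` class, the event names, and the file names are all hypothetical, and no audio is actually played. In practice, this mapping lives inside the game engine or in middleware such as Wwise or FMOD.

```python
class TriggerMap:
    """Maps user interactions to sounds, the way pads map to samples."""

    def __init__(self):
        self._bindings = {}        # event name -> (sound name, is_loop)
        self.active_loops = set()  # loops that are currently running
        self.played = []           # one-shots fired so far, in order

    def bind(self, event, sound, loop=False):
        self._bindings[event] = (sound, loop)

    def on_event(self, event):
        """Handle one interaction; returns the sound involved, or None."""
        binding = self._bindings.get(event)
        if binding is None:
            return None
        sound, loop = binding
        if loop:
            # Loops toggle: the first trigger starts them, the next stops them.
            if sound in self.active_loops:
                self.active_loops.remove(sound)
            else:
                self.active_loops.add(sound)
        else:
            self.played.append(sound)
        return sound


triggers = TriggerMap()
triggers.bind("touch_phonograph", "phonograph_music.wav", loop=True)
triggers.bind("open_door", "door_creak.wav")

for event in ["open_door", "touch_phonograph", "open_door"]:
    triggers.on_event(event)

print(triggers.played)        # ['door_creak.wav', 'door_creak.wav']
print(triggers.active_loops)  # {'phonograph_music.wav'}
```

The user never sees this table. They simply open doors and touch objects, which is what "playing a musical instrument without knowing it" amounts to.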

Right, but that’s more like the way the sound’s going to be delivered. What about the essence of the sound itself?

Yeah, so in sound design, there are many ways to do that. I still use Pro Tools. Some people use Nuendo, some people use Reaper or Logic. In VR and games, some are designing in the game engine itself.

Interesting.

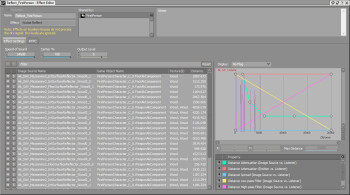

REAPER and Nuendo are very VR-friendly, but now Pro Tools has its new update, and they’re trying to work well within that space as well. I think everybody’s recognizing that there is a market in VR, and they’re trying to accommodate it. Some interface with Wwise, like Nuendo. We can load sounds into Wwise from Nuendo. So, I really think that it comes down to using the more common workstations that you’re familiar with. There’s no right or wrong way to create sounds. Some guys use Soundminer. It comes down to what you’re comfortable with as your design instrument.

Soundminer?

It’s a librarian tool that you can also put plug-ins on.

Do you have big libraries that you work with for getting raw sounds to manipulate, or do you still get a lot of stuff in the field, or does it depend on the project?

It’s exactly the same way it is in post-production for movies and TV. It comes down to time and budget. Obviously, we’d like to record as much as we can, but sometimes the time and the budget don’t allow for that. So, then we go into our libraries and utilize the sounds that we’ve collected to that point.

Are you talking about your own library, not a commercial one?

Whatever works. I think when you get a great sound, it doesn’t have to be that you invented it every time. I believe that great sound libraries have a little bit of everything. There are some great third-party libraries out there that sound great, and they’ve spent time and money to go out there or go to a place to capture the sounds that might not be accessible to you.

Right.

So why not? I mean, my ego is not such that it has to be only those things that I’ve recorded. You collect everything, whether you record it, whether it’s a friend’s, whether you buy libraries. There’s no right or wrong way; it’s just about getting access to great sound.

And then usually you do a lot of manipulation once you have the raw sound, right?

Absolutely. It depends on the kind of project that you’re on. If it’s a realistic project, where it’s kind of like a documentary and we’re sweetening it, then there’s not a lot of manipulation. A door’s a door, and a background is a background. But if we’re doing something that’s stylized or futuristic, absolutely. Again, I think what’s great about sound design is that it doesn’t always have to be about robots, creatures, and sci-fi. Creating a great soundtrack, even if it’s realistic, is all valid, and it’s all fun.

Right. But I assume if you’re working on a project that’s not a documentary, you might accentuate some sounds for dramatic effect?

Yeah, I did a piece called My Brother’s Keeper. And it’s about the Civil War, about two brothers, who end up on opposite sides of the war — North and South. And they meet each other in battle. And they recognize each other.

That’s intense.

A lot of the time there are muskets and cannons, and people shouting and cheering, and, you know, all of the sounds that you would imagine for the Civil War. But when we get to the section where they recognize each other, all the sound drops out. We go into what I call “hyper-reality,” where nothing else is important other than the two of them confronting each other and having to deal with that emotional dynamic. You know, are you my brother or are you my enemy? Following that emotional arc, the sound takes on an emotional approach instead of a realistic one. And sometimes it’s not about what sounds you put in, but about what sounds you decide not to put in.

I’ve noticed that there are several new software titles created for sound design that do sound morphing. Dehumaniser is one of them.

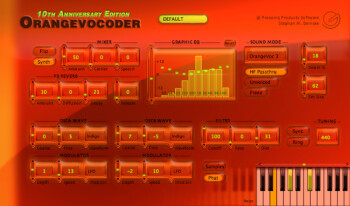

Yeah, you know what it is? There are some great plug-ins and sound tools that are just wonderful, no matter what industry you’re in. Orpheus, the Dehumaniser, it’s just wonderful stuff. The Morph. The Orange Vocoder is coming back. And I think it’s just great having access to great tools. Since the beginning of time we’ve always found a way to make interesting sounds; some things make our lives easier, and some things allow us to do things that we’ve never been able to do. And that’s the exciting part of sound design: there are these wonderful tools available to us to really stretch our creative muscle.

What’s the Orange Vocoder?

It was a vocoder that came out decades ago and is now a plug-in. Zynaptiq ended up updating it and making it even better and bringing it back out. And even adding features that didn’t exist on the original.

In the studio world, over the last few years, the quality of plug-ins has gotten so much higher that people are using them who wouldn’t have in the past. Is a similar thing happening in terms of digital processing for sound design?

Yeah. For sound design or for music production, we all use the same tools, we just use them differently. I think Waves has great tools. FabFilter has got great tools. UAD has amazing tools.

For sure.

A lot of developers offer emulations of LA-2As and 1176s and Pultecs, so you’ve got to pick which flavor or variation or version of that classic hardware you want to go with. But then there are developers making different types of plug-ins. For instance, Zynaptiq has this thing called Adaptiverb, where they get rid of all the peaks of the sound source and just really focus on the sustain of a sound, and of course there’s their Wormhole plug-in. There’s FabFilter’s Pro-R, which basically lets you control the reverb time per frequency. The Falcon plug-in from UVI, Altiverb, and Exponential Audio’s plug-ins are always a staple. Again, there are so many wonderful tools out there. My recommendation for everybody is always: download the demo. Play with it and see what makes sense to you.

What about field recording? Has the technology for that area changed a lot over the last few years?

Yes. I think the field-recording industry used to be mainly location sound recording, which was very expensive, and you just had to pony up. Right now there are a lot of companies making gear that is within the budget of a lot of sound designers, which is great. The first year everything was about $8,000, and then it turned into $4,000 to $2,000, and then Zoom came out with something for less than $1,000. And then everybody started finding solutions.

It really depends on your needs, mic pre quality, size, costs, a number of factors.

There are certainly a lot of portable recorders available.

I think the market for sound designers is probably too small for a lot of dedicated gear. But sound designers can tap into mobile recorders made for music, or those used for documentaries or EPKs. Sound Devices makes great gear. There are so many good recorders and good microphones. It really starts coming down to how many channels you need. What kind of mic preamps? What kind of dynamics do you need from the mic pre? Are you recording guns and explosions, or are you just recording backgrounds, so you need a very clean mic pre? Or are you just looking for something very accessible to capture with?

That makes sense.

I have multiple recorders of all different types. I think I’ve got four or five recorders that I use, and it just really depends on what I’m recording, when I’m recording, and what’s easy and what’s accessible.

What do you have?

I’ve got a Sound Devices 788, a 744, a Zoom F-8, and a Fostex FR2. The Fostex is big and clunky and they don’t make it anymore, but the mic pres on it are amazing. And then I’ve got a whole bunch of little mobile recorders, such as a Sony D50. Different things for different purposes. For example, sometimes you don’t want to be seen recording. Sound Devices has these new, really small recorders called MixPre, and there are others as well. Stealth recorders.

What would be a situation where you needed to hide your recorder?

I did a movie, I don’t know how many years ago, eight or ten years ago, and it was about poker. I used a binaural mic that basically looks like earplugs or headphones. Sennheiser, I think, just came out with a set as well, at the last NAMM show.

Roland had a pair of those too.

I think mine were Countryman. I could be wrong. Anyway, I went in, put those in my ears, put the recorder in my pocket, and walked around Las Vegas. I had permission, and I recorded the Bellagio and Binion’s. I also used another recorder, where I had little DPA mics on my wrists like Spider-Man, you know. So, as I directed my arms, I could do ORTF and XY and all that kind of stuff.

That’s crazy, wow!

Yeah, so I was walking around with just double mics and nobody knew I was recording because I wanted to record poker games.

Why is that guy walking around with his hands crossed? [Laughs] So you were trying to get the ambient sounds of a poker game, basically?

The filmmakers were adamant about making sure it was realistic. So Binion’s and Bellagio shut the music off for me. They knew where I was. And I would sit at poker tables, literally recording what was going on.

And were you playing at the same time?

Sometimes I was, sometimes I wasn’t. Sometimes I would just sit as a guest or whatever. They used that a lot of the time for the background, and I started learning the difference between tables. Then I went after hours and got close-up recordings of individuals: the money, where the chips are, people chipping. I started recording pro players and saw how they were snapping the cards differently than any Foley artist would, or a person who’s not a full-time gambler. One of the things I love about recording sounds, not even just for sci-fi stuff, is being able to get into somebody else’s skin and get a taste or an observation of their life, or their world. I’ve done it with aircraft. I’ve done it with the military. I’ve done it with beekeepers. You know, when I did Honey, I Shrunk the Kids, I spent two days collecting honey with a beekeeper.

Yikes!

It was great! And then he took the queen out and they started buzzing really loudly. I captured that, and it was a good main element of the bee flight in Honey, I Shrunk the Kids.

Cool.

I’ve gone inside an airplane into the avionics and recorded all the servos and gears. For Pacific Rim, I dropped 80-foot cargo containers on top of each other. And they sounded like giant canyon drums. You have to use imagination to decide what you need. And I love doing the period pieces to understand what it was like. For example, historical productions that use muskets. I like understanding how they did what they did, how they loaded them, what the sounds were like because you want to be able to capture a bit of realism. The audience not only gets to see what it may have looked like but also what it could have sounded like.

Back to field recording, do you ever use a surround microphone?

All the time. Yeah, I’ve got two microphones I’m using. No, three. Three now. I’ve got the DPA 5100. I’ve got the Sanken 5-channel mic, whatever. And then I’ve got an Ambeo from Sennheiser.

And what are the typical applications for those? Capturing ambient background sounds?

You know, it depends. Sometimes I want to get distant. Yeah, it tends to be more background-based. I’ve recorded lots of rain lately. It’s great for recording background effects. Like when we were recording helicopters and an Osprey showed up (it’s a long story). It just had a really great “wop” sound. It was about 30 or 40 yards away from where we were. We got a really great low-end thump because of the distance. It sounded better than the helicopters, and we ended up using that for the helicopter sound. I’ve also used it on crowds, like in The Book of Life. I went down to Mexico and recorded a hundred people in a bullring, chanting, and in addition to stereo and mono mics, I used multichannel mics to get the natural ambient reverb and the slap of that place.

Very cool.

So again, you get sounds of an airplane taking off, and it flies overhead, and you get that really wonderful slap that a multichannel mic can get you.

Thanks, Scott!

You’re welcome!