Volume, more volume! Yes, but at what price? This series of articles will review the origins of the volume race, the physical considerations, new standards and tools, and their impact on public health.

Digital audio was undoubtedly a “blessing.” We won’t go through all its innovations here, because the space I have for this article wouldn’t be enough. Let’s take just a few examples: a free, multi-platform editor like Audacity, featuring Paulstretch, which can feed any sampler endlessly. Convolution software like LA Convolver or Reverberate LE, which lets you put your voice “through” a guitar, for free. Amazing Slow Downer, one of the best transposition and time compression/expansion programs, available for only 50 bucks. MainStage ($30), a dedicated app for live performance (although Mac only), with heaps of audio effects, virtual synths and sampled instruments, plus a sampling tool derived from Redmatica’s AutoSampler (the secret weapon behind many sample library publishers…). Or take Auria, a 48-track DAW for iPad that even lets you work with video(!), all with flawless audio quality, for only $24.99.

But digital audio was also a “curse” because, even though you have more impressive and incredible tools at your disposal every day, audio quality has been steadily declining, with ever more compressed and distorted music and sound: the exact opposite of what makes music so full of life. And don’t forget about the impact of these practices on public health.

There’s something you must understand: if our environment and listening habits remain the same, we’ll end up with a deaf generation in 40–50 years! Some professionals have been warning us about this for several years. Even governments (well known for their quickness to respond to anything) have taken the matter into their own hands. It’s never too late to do good.

But before explaining this dire prospect, let’s go back in time and learn some history.

How? Why?

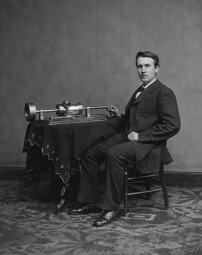

An obvious but necessary reminder: recorded music is only the most recent way of disseminating this art form. Before the first recording and reproduction devices were invented by Édouard-Léon Scott de Martinville (the phonautograph), Charles Cros (the paleophone) and Thomas Alva Edison (the phonograph), the only way to listen to music was live, at home or in a dedicated venue.

And once the means of recording were available, the idea of “producing” music or sound, in the sense of modifying it after it had been recorded onto a medium, was completely out of place: the basic principle was to render as faithfully as possible what the musicians, composers, arrangers, and music director had painstakingly conceived, from the composition to the performance, including the orchestration and arrangement. Modifying the dynamics of an orchestra or instrument afterwards, simply because the overall sound wasn’t loud enough, was not considered appropriate (see Reverse Orchestration below).

However, gain (or level) riding, which could be considered a sort of manual compression, was first used in film and music studios with the arrival of synchronized sound, to avoid overload distortion on peaks and to keep quiet passages from dropping beneath the noise floor of the medium. But it was at radio stations, especially, that the practice became widespread, leading to the first limiters once technicians began modifying amplifiers in-house. As a result, the Western Electric 110-A, RCA 96-A and Gates 17-B came onto the market in 1936–1937, freeing operators from this task.
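To make the “manual compression” analogy concrete, here is a minimal sketch of what an automatic gain rider does, written in Python. It is only an illustration under stated assumptions: the function name `limit` and the parameters `ceiling` and `release` are hypothetical, the signal is a plain list of samples between -1.0 and 1.0, and the code does not model the circuit of any of the units named above.

```python
def limit(samples, ceiling=0.5, release=0.999):
    """Illustrative peak limiter (hypothetical helper, not a model of real hardware).

    Returns (output_samples, gains), where gains[i] is the "fader position"
    applied to samples[i].
    """
    envelope = 0.0
    output, gains = [], []
    for x in samples:
        level = abs(x)
        # Instant attack, slow release: the envelope jumps up to any new peak
        # and otherwise decays a little on every sample.
        envelope = max(level, envelope * release)
        # Like an operator riding the fader: turn down only when the tracked
        # level exceeds the ceiling, and only by as much as needed.
        gain = ceiling / envelope if envelope > ceiling else 1.0
        output.append(x * gain)
        gains.append(gain)
    return output, gains


if __name__ == "__main__":
    signal = [0.1, 0.2, 1.0, 0.8, 0.1]
    limited, gains = limit(signal)
    for original, processed, gain in zip(signal, limited, gains):
        print(f"in={original:+.3f}  gain={gain:.3f}  out={processed:+.3f}")
```

In this sketch the quiet samples before the peak pass through untouched, the peak is pulled down to the ceiling, and the quiet sample right after the peak is still turned down because the gain recovers slowly.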

The first efficient units date from 1947, when the General Electric GE BA-5 (a feed-forward limiter) came out, which RCA immediately copied, naming it the… RCA BA-5. The first side effects appeared: pumping and distortion. Not long afterwards, the RCA BA-6A tried to solve these issues. The appearance of variable-mu tubes in the '50s, and of compressor/limiters based on opto-electric operation (Teletronix LA-2A) or field-effect transistors (Urei 1176) in the '60s, together with the first multi-track recordings, forever changed volume management before, during and after a recording.

Reverse orchestration

Obviously, faithful reproduction of what was heard wasn’t the only direction taken, and the first experiments took place in film. I’ll give you only two examples. In France, Maurice Jaubert, the first real theorist of film music, argued for relying on technical developments to create a different sonic reality, and put his theories into practice (for a noteworthy example, watch the dormitory scene in Jean Vigo’s Zero for Conduct, 1933).

In Russia, Prokofiev, a composer who worked closely with Sergei Eisenstein, practiced “reverse orchestration”* through microphone placement. This made it possible to distort an instrument or bring it out above an orchestra in a way that was impossible in reality (a solo bassoon above a triple forte orchestral tutti, for example). See, for example, Alexander Nevsky (1938).

* A term coined by Robert Robertson in Eisenstein on the Audiovisual, I.B. Tauris, 2009.