Mastering, like mixing, is an art form that requires good musical judgment and entails a great deal of decision-making. Just ask engineer Nathan Hamiel (pronounced “Hamel”), who does both mixing and mastering, as well as recording, at The Freq Zone, his facility in Jacksonville, FL.

Hamiel’s client list includes bands like Saliva, Guttermouth and Molly Hatchet, as well as the punk label Bird Attack Records. In this interview, Hamiel covers the gamut from the Landr.com online mastering service to how he handles his clients. He also addresses some specific mastering issues and describes his hybrid hardware/software mastering rig.

I was interested to see your blog post about Landr.com’s auto-mastering service, and why it can’t come close to doing what a real mastering engineer can. I totally agree with you, by the way.

One of the reasons I wrote that blog post is that I got tired of arguing with people and I wanted to sum up my thoughts about it. There are hundreds of different decisions that happen during the mastering process that can’t be translated to an algorithm. Why? Because an algorithm doesn’t have any context. Even if there are genre-specific presets, it still doesn’t know what kind of music it’s processing. It’s taking parameters and spitting something out. The problem with using an algorithm to process audio is that it’s very indiscriminate, especially at the mastering phase. Every single processing decision that you make has benefits and drawbacks. So if you’re correcting something, it may sound better in the end, but you’re also injecting drawbacks into the process.

That makes sense.

Take an EQ, for instance. People take that for granted, but an EQ has processing side effects. Depending on the type of EQ, you get phase distortion and ringing distortion. Same thing with multiband compression. If I’m mastering a track and I put a multiband compressor on it, I’m doing that because I want to focus on a certain area, or certain areas, plural, that may benefit from some processing. It’s either going to fix a problem or it’s going to hold things together better.
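To put a number on the phase side effect of even a simple boost, here is a minimal sketch. It assumes a standard digital peaking EQ built from the widely used RBJ “Audio EQ Cookbook” biquad formulas (an assumption; the interview doesn’t name any particular EQ), and the 3 kHz, +4 dB, Q of 1.0 at 48 kHz settings are arbitrary example values. The gain lands exactly where it was asked for, and the phase is zero at the center frequency, but it shifts on either side of it even though only one band was touched.

```python
import cmath
import math


def peaking_eq_coeffs(f0_hz, gain_db, q, fs_hz):
    """Biquad coefficients for a peaking EQ using the RBJ "Audio EQ Cookbook"
    formulas. Returns (b, a) normalized so that a[0] == 1."""
    a_lin = 10 ** (gain_db / 40)          # cookbook amplitude term (sqrt of linear gain)
    w0 = 2 * math.pi * f0_hz / fs_hz       # center frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * a_lin, -2 * math.cos(w0), 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * math.cos(w0), 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def response(b, a, f_hz, fs_hz):
    """Evaluate H(e^jw) = B(z) / A(z) at one frequency.
    Returns (gain in dB, phase in degrees)."""
    z_inv = cmath.exp(-1j * 2 * math.pi * f_hz / fs_hz)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    h = num / den
    return 20 * math.log10(abs(h)), math.degrees(cmath.phase(h))


if __name__ == "__main__":
    b, a = peaking_eq_coeffs(f0_hz=3000, gain_db=4, q=1.0, fs_hz=48000)
    for f in (1000, 2000, 3000, 4500, 9000):
        gain, phase = response(b, a, f, 48000)
        print(f"{f:>5} Hz: {gain:+.2f} dB, phase {phase:+.1f} deg")
```

A linear-phase EQ avoids this phase shift, but at the cost of latency and pre-ringing, which is the kind of trade-off Hamiel describes: every processing choice has a drawback somewhere.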

You don’t want to compress the entire spectrum.

Maybe that is a good decision, maybe it isn’t. When you’re writing an algorithm, you’re saying, “Set up this matrix of multiband compressors and process at this level when it hits this particular threshold.” But as a human, I might hear that the upper midrange could really use some compression, while everything else I don’t want to touch. So basically you turn off all of the other bands. But you’re still putting in a filter matrix, and that filter matrix determines where the cutoff points are for each band. So the more bands you add in there, even if they aren’t actively compressing anything, the more they can add artifacts to the sound. So I would say that mastering is supposed to be very purposeful and very deliberate processing. You should be listening to the music and saying, “This is something I’d like to change.” Then you change it and ask, “Is that better or worse?” It’s a whole process of evaluating those changes as you go, and that’s only one part of the mastering process. It doesn’t include everything that happens before those processing decisions, like error checking: Is the mix even good enough to be mastered? Does it need to be remixed? Are there imbalance issues in the mix that won’t translate well in the master? All of that happens before you even get to applying EQ, compression, limiting and all the other things.
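Here is a sketch of why a band that isn’t compressing can still change the sound. It assumes the compressor splits the signal with 4th-order Linkwitz-Riley crossovers (a common design, but an assumption here: no product is named, and some plug-ins use linear-phase crossovers instead), modeled as ideal analog filters at a hypothetical 1 kHz crossover point. With every band at unity gain, the bands sum back to a perfectly flat magnitude, yet the phase is rotated across the spectrum, by 180 degrees at the crossover itself.

```python
import cmath
import math


def lr4_bands(freq_hz, crossover_hz):
    """Low and high outputs of an ideal analog 4th-order Linkwitz-Riley
    crossover (two cascaded 2nd-order Butterworth sections per band)."""
    s = 1j * freq_hz / crossover_hz              # normalized Laplace variable, s = j*w/wc
    butter2 = s * s + math.sqrt(2) * s + 1       # 2nd-order Butterworth denominator
    low = (1 / butter2) ** 2
    high = (s * s / butter2) ** 2
    return low, high


def summed_response(freq_hz, crossover_hz):
    """Recombine both bands at unity gain, i.e. as if neither band is compressing.
    Returns (magnitude, phase in degrees)."""
    low, high = lr4_bands(freq_hz, crossover_hz)
    total = low + high
    return abs(total), math.degrees(cmath.phase(total))


if __name__ == "__main__":
    for f in (100, 500, 1000, 2000, 10000):
        mag, phase = summed_response(f, crossover_hz=1000)
        print(f"{f:>6} Hz: magnitude {mag:.6f}, phase {phase:+.1f} deg")
```

Each additional band adds another crossover point and another phase rotation like this one, which is one concrete form of the artifacts that idle bands can introduce.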

What are you looking for with error checking?

Any clicks and pops that could be accentuated by the processing. Maybe there are real problems with the file. Maybe there’s clipping that shouldn’t be in there. Maybe there’s an imbalance in the file, and for some reason it’s lopsided. A lot of times imbalances are caused by people overloading one side of a mix. For example, the hi-hat is blaring in your face and it’s panned to the left. It’s not as easy as, “Hey, let’s bring up the right side a dB.” That’s because the imbalance is caused by a specific instrument in the mix, not necessarily by printing through some analog gear that was imbalanced.
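Two of these checks, clipping and left/right imbalance, are easy to rough out in code. The sketch below assumes float samples normalized to ±1.0 and already loaded into lists (file loading is left out). It flags runs of consecutive near-full-scale samples as possible clipping and compares the RMS level of the two channels. The threshold and run-length values are illustrative guesses, not settings from the interview.

```python
import math


def find_clipped_runs(samples, threshold=0.999, min_run=3):
    """Return (start_index, length) for each run of at least `min_run`
    consecutive samples whose magnitude is >= `threshold`. Several
    full-scale samples in a row are a common sign of clipping."""
    runs, start = [], None
    for i, x in enumerate(samples):
        if abs(x) >= threshold:
            if start is None:
                start = i                     # a possible clipped run begins here
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))   # run lasting to the end of the file
    return runs


def rms_db(samples):
    """RMS level in dBFS, with full scale = 1.0."""
    mean_sq = sum(x * x for x in samples) / len(samples)
    return 10 * math.log10(mean_sq) if mean_sq > 0 else float("-inf")


def channel_balance_db(left, right):
    """Level difference in dB; positive means the left channel is hotter."""
    return rms_db(left) - rms_db(right)
```

A balance figure only says that one side is hotter, not why. If a hard-panned hi-hat is the cause, the number will show the imbalance, but simply turning up the right channel won’t fix it.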

I guess a lot of the tricky part is when you have to get inside the stereo mix and try to adjust certain elements without harming the rest too much. I would assume you’d use MS [mid-side] for that a lot? In your hypothetical example of the hi-hat causing the image to be imbalanced, you might use MS set only on the sides and then try to EQ within a very narrow band?

You can try things like that, and I do. I use MS processing when it’s necessary. I try not to think like that, because I don’t want to get myself in a position where I’m like, “I’m just going to throw this in MS mode and start tweaking some things.” I like to think about what is the problem I’m trying to solve. For example, say there’s something very harsh happening. Where is it happening? Is it broadband? Is it in the left or the right? Where is the problem, and can I solve this problem without damaging too much?
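For readers new to MS processing, here is a minimal sketch of the standard mid-side matrix, using the common (L+R)/2 and (L−R)/2 scaling (other tools scale differently, for example by 1/√2). For simplicity it applies a broadband gain to the side signal rather than an EQ. It shows why side-only processing leaves dead-center material untouched, and also why it isn’t surgical: anything panned off-center, a hi-hat included, lands partly in the side signal along with every other stereo element.

```python
def ms_encode(left, right):
    """Convert L/R sample lists to mid (sum) and side (difference) signals."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def ms_decode(mid, side):
    """Convert mid/side back to L/R: L = M + S, R = M - S."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


def scale_sides(left, right, side_gain):
    """Change only the side signal; anything panned dead center is unaffected."""
    mid, side = ms_encode(left, right)
    return ms_decode(mid, [s * side_gain for s in side])


if __name__ == "__main__":
    # A hard-left sample (1.0, 0.0) with the sides halved: part of it
    # moves toward the center, so the hard-panned element is changed too.
    print(scale_sides([1.0], [0.0], 0.5))   # ([0.75], [0.25])
```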

In my very limited mastering experience, if there was something like that hi-hat situation, I’d look for a way to get in and lower that hi-hat without compressing or EQing that frequency across the whole mix.

Yeah. And realistically, so many people are using stereo effects and have things spread all over, so that hi-hat may be sitting in the same frequency range as some stereo effect, or the higher part of the guitar, or something like that. Those things are living on the sides as well. So you ask: Is this better than it was? Even if you negatively affect something, is it better than it was? I also try to take notes on things, like, “I went through and did it and I think it sounds good, but if you think this isn’t bright enough, here’s why. If you want to remix it, go ahead and do a mix revision and send it back to me, and I can try to liven things up.”

That brings up another point: One of the very few advantages people have when mastering their own material is that they can easily reopen their mixes to fix things quickly. I assume there’s a limited number of times you can ask a client for mix revisions. Or maybe you listen to everything first and say, “These mixes aren’t going to cut it, you’d better fix this before I start.”

Yeah, and that’s typically what you do. The first thing you do... it’s funny, because I see all these people putting out how-tos: “how to master your own tracks,” “how to do this,” “how to do that.” Some people have become very famous on YouTube for telling people how to master. The very first thing that nobody ever mentions is, how about listening to the song? You have to listen to it first. That’s the very first step, because you usually only have one or two opportunities to get that kind of initial impression. Typically, when I’m doing my initial listening and error checking, I’m jotting down notes really quickly, like, “The low end is too high on this, it’s going to need some correction,” or “It could probably use a bit of stereo width, it feels kind of narrow.” I take those notes as I’m listening to the song: “The song sounds very well mixed and well balanced, it’s going to master well.” Or, “Somebody has these instruments all out of balance, there’s no cohesion whatsoever, and they really need to go back to the drawing board.” At that point, I don’t have to put any more work into it. I turn it over to them and say, “Hey, there are some issues here.” When you’re mastering your own stuff, you have to be really disciplined, because if you’re recording, mixing and mastering your own stuff, you probably did it all in the same place. You don’t really have the benefit of perspective.

Absolutely.

So if there’s a problem that started in the recording phase and persisted through the mixing phase, the odds of it being caught in the mastering phase are pretty slim. These days, I think the concept of using a mastering engineer has been diminished, and not just because of services like Landr. People who are working on their own music are like, “Why am I going to pay someone to do this? I have an EQ right here in my DAW.” I think that’s a really poor perspective, because part of the reason you’re sending it away isn’t just that the person is going to add EQ to it. You’re getting the benefit of their room, their monitoring system, and their experience. And even if it’s not experience, even if it’s just a different set of ears on the project, that’s part of what you’re paying for as well. Those are the “value adds” of having somebody else work on it. I’ve been recording for quite a long time, and I have tons of stuff out there that I regret, because I either tried to do it myself, or I got tired of it and rushed through the project. It’s embarrassing. Nothing is worse than having regrets, as far as that stuff goes.

For sure. I had a couple of situations where I recorded projects myself and sent them to mastering engineers, and both times I discovered that something about the acoustics in my studio cuts the bass I hear, so I tend to overcompensate. I wouldn’t have known that if I hadn’t sent it to a mastering engineer who had an accurate listening environment.

I think it’s more about building up a relationship. I’ve been dealing with a client who I actually met at NAMM this year, and I’ve had long conversations with her. She approached me and was asking about mastering. She said she’d spent a lot of money getting her album mixed and mastered, and she just didn’t feel that it sounded right, but she didn’t know how to articulate what was wrong. She was like, “It doesn’t feel warm and inviting.” Those are the adjectives that people tend to use. So I told her to go ahead and send it to me. She told me that she’d sent it to some Grammy-winning mastering engineer who ended up doing a really poor job on the master.

Wow, that’s surprising.

You really need to create a relationship with the person mastering the music, because everybody refers to things differently and describes things differently. And at the end of the day, we’re in a service industry. I’m going to do what you ask me to do. Even if I think a song is too bright, if you ask me to make it brighter, you’re the customer, and I’m going to give you what you want. You have some artistic vision, and maybe I just don’t realize it. You’re asking me to do something, and I think it’s an issue when people try to impose their own artistic vision on the customer’s material, which isn’t theirs to impose. I think learning the lingo is important. I have one person who refers to compression as “being crispy,” and one customer who refers to midrange as “compression.” So it’s learning what they mean when they explain things, and that takes a little bit of time. Say you think, “I’m going to send one track out and see what a mastering engineer does with it.” If you send that mastering engineer no context, nothing about what you’re going for, nothing to use as a reference, they’re going to do what they think you want, and you may get it back and say, “This doesn’t sound anything like what I expected.”

But isn’t a lot of it about how it sounds from one track to the next on an album anyway? That’s part of the whole thing, to give it a consistent sound, right?

Well, I mean, maybe. Once again, you’re working for the customer so you may have a wacky customer who says, “I want every song to feel like a different band is doing it. I want it to feel like a compilation album.” [Laughs]

But as a general rule…

You want it to be cohesive at a minimum from a level perspective. It shouldn’t have a whole bunch of level jumps.
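A rough sketch of the level side of this, assuming plain RMS over each whole track as the level measure. That is a simplification: album work would more likely use a loudness measure such as LUFS (ITU-R BS.1770), and matched numbers are only a starting point for listening. The track names and sample data are hypothetical.

```python
import math


def rms_dbfs(samples):
    """RMS level in dBFS, with full scale = 1.0."""
    mean_sq = sum(x * x for x in samples) / len(samples)
    return 10 * math.log10(mean_sq) if mean_sq > 0 else float("-inf")


def gain_offsets_db(tracks, target_db=None):
    """Gain in dB that would bring each track's RMS level to a common target.
    `tracks` maps a track name to its samples. If no target is given, the
    quietest track's level is used, so every offset is a cut or zero."""
    levels = {name: rms_dbfs(samples) for name, samples in tracks.items()}
    if target_db is None:
        target_db = min(levels.values())
    return {name: target_db - level for name, level in levels.items()}


if __name__ == "__main__":
    album = {"song_a": [0.5] * 8, "song_b": [0.25] * 8}   # hypothetical data
    print(gain_offsets_db(album))   # song_a about -6.02 dB, song_b 0.0 dB
```

Defaulting to the quietest track keeps the sketch from ever pushing a track up into clipping; that is a design choice for the example, not a rule from the interview.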

What about consistent EQ?

That too. And that’s where it gets kind of tricky, because with albums nowadays, an artist may have recorded at different studios, so each mix might sound pretty different. Or they’ve spent a year recording it themselves, and throughout the year, maybe they’ve bought new gear: “I’ve got this new microphone, now I’m going to record with this.” “I’ve got this new guitar, and now I’m going to use it on this song.” A lot of bands aren’t hitting the studio and knocking out an album anymore. They’re doing it over the course of a year, so things naturally sound a little less cohesive on an album. But the goal is to make it sound like an album, not a collection of songs.

What do you think about mastering software like Ozone and things like that, which give you the ability to work on it yourself and open a preset and then tweak it from there?

I see all of these as just tools. I use a hybrid setup, a combination of software and hardware working in conjunction with each other. They’re all just tools getting you to the end point. I think the software has really upped its game from a quality standpoint over the last few years, so tools like that are great.

What do you use, software-wise?

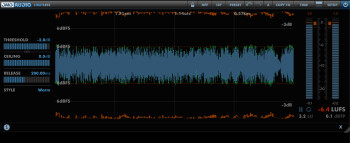

I use a lot of different things. I do have Ozone 7, and the parts of it I probably use most often are the Dynamic EQ and maybe the Linear Phase. I like it because I can just go in there and hit “Mid-Side Mode.” I also use the new IRC IV algorithm in their maximizer, which is really, really good. You can get some pretty decent level increases without too many artifacts. Another piece of software that I like a lot is DMG Audio Equilibrium. It’s a great EQ plug-in. DMG Audio also just released a new limiter called Limitless, and that thing is a beast. You can shoot yourself in the foot with it really easily, but if you know what you’re doing, it’s definitely a beast.

What do you use for sequencing the tracks and setting the spacing between them and all that?

I use two different workflows for that. When I’m doing the initial processing of each of the WAV files, lately I’m typically in PreSonus Studio One.

In the Project Page?

No, the good old Song Page. The reason I can’t use the Project Page, or the other program that I use, [Steinberg] WaveLab, is that as part of the mastering workflow, you want to A/B your mix to be sure you’re making an improvement and haven’t taken a step backwards. I have a Dangerous Monitor ST, and I route the raw mix to one of its inputs and the processed signal to another, so that at any given time I can hit that button and hear what the mix sounds like. I don’t have to go through and click plug-ins, I don’t have to find windows, I don’t have to do any of that stuff. It’s very easy. When you’re mastering, little moments like that are the ones that make all the difference, because you’re going to hear things that you didn’t hear before.

So you’re A/Bing between the unprocessed and the processed.

That is correct.

What do you use for monitoring when you’re mastering?

I have a pair of Focal SM9s, with a Dynaudio BM14 or 15 MK II sub. Those are the main monitors.

You have other Dangerous gear in addition to the Monitor ST, right? Do you have the Dangerous Compressor?

Yeah. I have quite a bit of Dangerous gear. I have the Dangerous Master and the Dangerous Liaison; that’s basically my mastering backbone.

What does Liaison do?

Liaison is like a push-button-style patch bay kind of a thing. Basically, it allows you to use your hardware kind of like you’d use plug-ins. So you can quickly A/B, you can quickly turn things on and off. It has two busses, so you can add things to one bus or another bus.

So you can use it to patch hardware into the mastering signal path?

Exactly. You pre-patch your gear into it, and then you can selectively add it to or remove it from the signal path. For me it’s indispensable. If you’re going to use hardware as part of your mastering process, you have to have a piece of gear like that, because you can’t take the time to turn around, unplug something from a patch bay, re-listen to it, and then plug it back in. You’re going to lose your perspective on the material during that time. I can do both mid-side and parallel processing with those two pieces of gear in conjunction with each other. And then I have a Dangerous Compressor, and I also have two Dangerous Convert-2s. One of them feeds the analog mastering chain and the other one feeds my monitor path.

Those are converters?

Yes. Those are the DACs.

Do you find that those converters do something positive to the material, just by running it through them?

The converters, yes. It’s all pretty clean, which is what you want in a DAC. It’s hard to explain. I did a DAC shootout when I got my first Convert-2, and the way I kind of explained it is, I’m a scientific guy, I like to understand how things work. And there are a lot of different adjectives you can use to describe it. The imaging’s a bit better, it feels more cohesive. But it was something about the music that just felt better. I don’t know why, I don’t know what is happening or why it feels better. But at the end of the day better is better and that makes me happy.

Interesting.

And the other thing about converters is that every single audio decision you make is cumulative. Let’s talk ADCs for a minute. Say you have two analog-to-digital converters, and you’re like, “Well, these two converters are very similar. This other one is cheaper, it doesn’t sound quite as good, but it’s a small difference, it doesn’t matter to me.” So what happens when you record 30 channels of input? If you make a few bad decisions, they add up. I shouldn’t say “bad,” I should say “poor.” Poor audio decisions add up the same way good audio decisions do. If these converters are just a little bit better, or this piece of gear is just a little bit better, all of those things work together to make a better result. So in the end, your almost imperceptible difference becomes a perceptible difference because of the decisions you’ve made.
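One way to put rough numbers on “small differences add up” is sketched below, under two simplifying assumptions: the noise on each recorded channel is uncorrelated and all channels are summed at unity gain, and stages in series each contribute a fixed gain deviation at some frequency. The -110 dBFS per-channel figure is hypothetical, not a measurement of any converter.

```python
import math


def summed_noise_dbfs(per_channel_noise_dbfs, channels):
    """Noise floor after summing `channels` uncorrelated noise sources of equal
    level at unity gain. Uncorrelated powers add, so the floor rises by
    10 * log10(channels) dB."""
    return per_channel_noise_dbfs + 10 * math.log10(channels)


def cascaded_deviation_db(per_stage_db, stages):
    """Total deviation of identical stages in series: their linear gains
    multiply, so their deviations in dB simply add."""
    return per_stage_db * stages


if __name__ == "__main__":
    print(f"{summed_noise_dbfs(-110, 30):.1f} dBFS")   # about -95.2 dBFS
    print(f"{cascaded_deviation_db(0.2, 10):.1f} dB")  # 2.0 dB
```

With these hypothetical numbers, a noise floor that is negligible on any one channel sits roughly 15 dB higher once 30 channels are mixed, and ten stages that are each only 0.2 dB off at some frequency add up to a 2 dB deviation.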