In this final installment of the series, we present a Q&A with Dr Thierry Briche, ENT specialist, and the former chief of the prestigious Val-de-Grâce Hospital in Paris, France. He was kind enough to talk to us about the ear, its architecture, risks, and potential injuries.

Thank you for accepting this interview. How would you describe the audio path, the ear, and its particularities?

First of all, you need to think of it in terms of the transmitter, be it a musician, a tape recorder, a personal music player, etc. The problem is the quality of the music, which doesn’t have to do with the transmitter, but is nevertheless a part of the message. The way you have to think about it is that it’s a problem of the transmitter, the message and the receiver. We first need to talk about the transmitter, which can take different forms. Take an instrument, for example, in an open space, a public space, like a stage. That’s where the quality of the work of the architect intervenes, the acoustic quality of the music venue, the reverb coefficient, etc. We all know there are pretty bad venues, especially those that are multipurpose.

And then comes the message…

It’s obvious that modern music, especially EDM, is more appealing due to the presence of low frequencies. Another well-known aspect has to do with the beat of a song, which has to be close to that of the heart, because it produces a well-documented trance effect. Some surprising experiments have been carried out, like filtering the music during parties to only let low frequencies through. The result? People don’t change their behavior right away. It takes them some time to become aware that there’s no actual “music” playing. In such cases, it’s easy to realize that music is only another message. Next, we have the so-called Tullio effect [after Italian biologist Pietro Tullio, 1881–1941, who discovered it experimenting with pigeons], which has to do with the vertigo that a sound wave can produce at high volumes. I even found out about a bassist who literally dropped dead after suffering vertigo while playing right next to the speakers, due to the Tullio effect.

How would you explain this phenomenon?

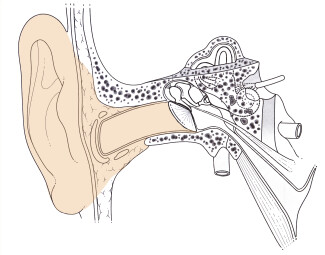

It’s the intensity. The intensity is such that it impacts the tympanic system, giving rise to loss of balance, dizziness. You know how the auditory system works: As I speak to you, air molecules are excited one after the other. Each molecule stops as soon as the next one is stimulated. This makes your eardrum vibrate, but, contrary to what you might think, it does not vibrate homogeneously, it vibrates differently depending on the frequencies.

Does it vibrate at the same frequency as the frequency transmitted?

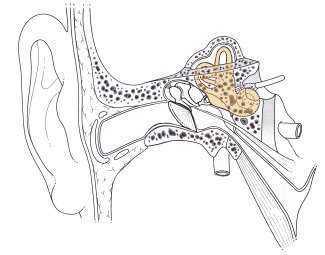

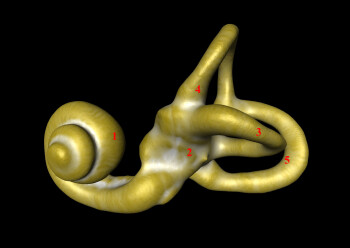

No, not really. From the studies available, it seems that the eardrum vibrates at different locations according to the frequency of sound. Another important thing is the transmission of sound by the ossicles through a sort of lever effect that amplifies sound. The last ossicle, called the stapes, is like a stirrup, with a footplate that rocks back and forth in the oval window, compressing a fluid. This fluid is set into motion along the cochlea, a sort of snail. So the pressure applied goes up first and then down. This valve does not work linearly, and the compressed fluid sets in motion the spiral organ, a neuroepithelium dedicated to audition. Frequencies are ordered like on a piano, like octaves, one after the other. And, from a physical point of view, every octave uses the same amount of space.

On the basilar membrane?

Yes. If you were to unroll this membrane, it would be like a piano keyboard. It is about two and a half turns long, with the lowest frequencies at the end and the highest ones at the beginning. That explains why high frequencies are always the first affected, since they are closer to the exterior. Some sort of frequency syncing takes place on this membrane. In other words, a part of it vibrates according to the frequency heard. That’s what’s called tonotopy: Each frequency makes one and only one region vibrate, to stimulate the zone that detects the frequency. So, when you have an injury, you lose some frequencies, but the neighboring frequencies aren’t necessarily affected. That explains the gaps in the auditory message.

For the receivers?

It’s important to understand transduction, that is, the transformation of a physical message. My voice is an air column that vibrates and travels through air (the eardrum, the ossicles, the tympanic cavity) until the last ossicle compresses the fluid, and then it goes into a liquid medium. The transmission is not one to one between air and fluid; transmission from one medium to another is quite bad. Just think about how you hear sounds underwater. So nature invented two systems to amplify sound. First the lever arm of the three ossicles and, especially, the difference in size between the eardrum, almost as big as the nail of your thumb, and the stirrup’s footplate. This difference results in an amplification of sound, like the small surface of a jack allows you to lift a car.
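The two amplification mechanisms described above can be put into rough numbers. The short Python sketch below multiplies the area ratio by the lever ratio and converts the result to decibels. The figures used (an effective eardrum area of about 55 mm², a footplate area of about 3.2 mm², a lever ratio of about 1.3) are commonly quoted textbook approximations and are assumptions here, not values given in the interview.

```python
import math

def middle_ear_pressure_gain(eardrum_area_mm2, footplate_area_mm2, lever_ratio):
    """Pressure gain from the area ratio (the 'jack' effect) times the ossicle lever ratio."""
    return (eardrum_area_mm2 / footplate_area_mm2) * lever_ratio

def to_decibels(pressure_ratio):
    """Convert a pressure ratio to decibels (20 * log10 for pressure quantities)."""
    return 20 * math.log10(pressure_ratio)

# Assumed illustrative values, not taken from the interview.
EARDRUM_AREA_MM2 = 55.0
FOOTPLATE_AREA_MM2 = 3.2
LEVER_RATIO = 1.3

gain = middle_ear_pressure_gain(EARDRUM_AREA_MM2, FOOTPLATE_AREA_MM2, LEVER_RATIO)
gain_db = to_decibels(gain)
print(f"Pressure gain: about {gain:.1f}x, or roughly {gain_db:.0f} dB")
```

With these assumed values the gain comes out at roughly 22 times, or about 27 dB. Most of it comes from the difference in surface area, which matches the emphasis in the answer above.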

Old physics tricks…

Yes. And we are subject to potential injuries in all of these different parts.

Mechanical damage.

In the cochlea it’s always mechanical. And there’s another thing, regarding the frequency that resonates with the audio message emitted. Theoretically, the ear can hear from 20Hz to 20kHz, but the frequency range studied goes from 125Hz to 8kHz. The rest is considered useless in our domain.

It’s not used for comprehension?

Broadly speaking, we only study between the first and second turn of the cochlea. Within these limits, the only interesting frequencies for what is called socially useful audition are between 500Hz and 2000Hz.

Really?

Yes, that’s what we call conversational frequencies. But they vary greatly depending on the language. So, for instance, French has a very narrow spectrum, since it’s rather monotonous. Polish is said to have the widest spectrum. Some have even pointed out that Poles are the best suited to learn foreign languages, thanks precisely to this wide range. But let’s go back to transmission. The sound pulse, once in the fluid, sets in motion a very specific part of the detection organ. So, for each frequency, there is an inner hair cell that typically decodes a frequency, 4365Hz, for instance, and three outer cells that amplify it. Remember that, from a geographical point of view, every octave has an equivalent surface in the cochlea. So, for an octave of low frequencies, you have far fewer hair cells than for a high-frequency octave. That’s why a topographically well localized injury can produce a lot of damage, since there are too many frequencies in a very reduced space.
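To see why a high-frequency octave packs so many more frequencies into the same stretch of cochlea, it helps to list the octaves in the 125Hz to 8kHz range mentioned earlier and compare their widths in hertz. The Python sketch below does only that arithmetic. The idea that each octave occupies the same length is the simplification used in this interview, and the function name is purely illustrative.

```python
def octave_bands(low_hz, high_hz):
    """Return (start, end) pairs for each full octave between low_hz and high_hz."""
    bands = []
    start = low_hz
    while start * 2 <= high_hz:
        bands.append((start, start * 2))
        start *= 2
    return bands

# Range studied in audiometry, as quoted in the interview.
bands = octave_bands(125, 8000)
widths = [hi - lo for lo, hi in bands]

for (lo, hi), width in zip(bands, widths):
    print(f"{lo:>5} Hz to {hi:>5} Hz spans {width:>5} Hz")

# How many times wider (in Hz) the top octave is than the bottom one.
ratio = widths[-1] / widths[0]
print(f"Top octave covers {ratio:.0f} times as many hertz as the bottom octave")
```

The range contains six octaves, and the top one (4000Hz to 8000Hz) spans 32 times as many hertz as the bottom one (125Hz to 250Hz). If both take up the same space, a small localized injury near the high-frequency end wipes out a much wider band.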

And that’s why we lose more high frequencies than low frequencies.

And even more so if you consider the elasticity of the medium: Low frequencies are decoded last, because the membrane is more and more rigid, so it vibrates less. At first, the membrane vibrates a lot, so it can get damaged quite easily if the intensity is too high. We are talking mechanical damage here. It’s only at the level of the inner hair cells that transduction takes place, in other words, the passing from a mechanical to a nerve impulse. Don’t forget that we hear with the brain, not the ears. The nerve impulse gets to the dedicated zones through the different relays and that’s where the message is decoded and understood. Thus, music can be qualified as melodious noise. And it is generally conceived as such, in the sense that it’s not unusual that we can’t stand the music of others.

And what about traumas? I often refer to the acoustic reflex and the problems linked to the latency of its operation.

Yes, 100ms, more or less. Take a blast, an accident, for instance. In such cases it’s short-circuited because the sound wave is faster than the reflex; the ossicles could dislocate, destroying internal structures.

Could that happen even without being exposed to a blast?

Diving, for example. Especially if you have a cold: A clogged nostril affects the left ear-right ear balance, one of which could suddenly get obstructed, producing a very violent stimulation of the stirrup, which could harm the inner ear; sometimes irremediably. Don’t ever think of diving when you have a cold, nor on your own!

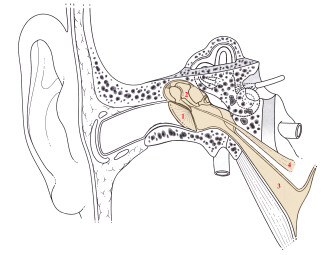

The same applies to music and the Tullio effect. Although we have never heard of similar injuries to the ones I mentioned before after a concert. At least I haven’t seen one, but that doesn’t mean it has never happened. When it comes to medicine, you should never say no. Furthermore, injuries due to music, to sound, often happen during the transduction. At the top of the inner hair cells there are a sort of lashes that are displaced when the stimulation frequency corresponds to the cell frequency. This displacement brings about a difference in potential between the top and bottom of the cell, like a piezoelectric quartz. Chemical substances are liberated at the bottom of the cell, especially glutamate, which stimulates the beginning of the nerve fiber and generates an electric message. The glutamate is reabsorbed after the stimulation.

There is a relationship between the liberation of glutamate and the audio message. When the message is too intense, there’s an excess of liberation and, in a way, an irritation of the nerve ending. This can generate an audio burst which could take its toll, because the cell can’t reabsorb all the glutamate, so it won’t be functional any longer and, hence, will not be able to decode the frequencies that correspond to it. The glutamate becomes toxic for the cell, producing ototoxicity. The nervous tissue does not regenerate, it’s gone. And there are also ototoxicities due to medicines, with sometimes even more brutal effects, like the total loss of audition after the accumulation of a product and the exceeding of the threshold.

Is it unreasonable to say that generation Y (that of mobile and overcompressed music) is at risk of becoming deaf faster than the previous one?

You need to consider listening behaviors, the consequences over time and possible harmful effects. The real issue here is how music is used; it doesn’t have to do with a group that has a special sensitivity. It’s an educational problem, both on the side of the transmitter and the receiver. We are unfortunately subjected to marketing dynamics… How do you create an auditory reference? We took 1,000 people who had good hearing. We made them listen to frequencies one by one, asking them to tell us when they started hearing the frequency and when they stopped hearing it. This resulted in a non-linear curve.

The equal-loudness contours?

Correct. Then we asked them to tell us when the frequency began to be painful. And so we realized that it’s different for every frequency. People tend to have a lot of tolerance in the mids. Sounds in this frequency range are picked up very fast and can increase in level quite a bit before they start becoming painful. However, the low and high frequencies become painful pretty fast. We set the 0dB curve as the one corresponding to the lowest intensity heard by this group of people.

And it isn’t absolute silence?

No. Other studies carried out, especially by the military, demonstrated that the 0dB mark at 6000Hz was inaccurate, that we were wrong. The results at other frequencies do correspond to what has been published in the ISO standards, but the 0dB at 6000Hz is overestimated, it’s too low. That’s why when we made some tests with young people, they showed a dip at 6000Hz. And thus a legend was born: the Walkman damages the 6000Hz frequency! But it was a measurement error and not a generational and/or technical phenomenon.

Wow…!

And then there’s aging. The first frequencies lost are in the upper ranges, the ones closer to the exterior. Take a classic representation, the audiometric curve, with a horizontal bar between 0dB and 30dB that bends progressively towards the high frequencies. Degradation begins at around 50. And most people have similar hearing capabilities in their seventh decade of life. Psychological, mechanical and/or cortical aging, similar to what happens to sight.

The eardrums turn stiffer?

No, not really. The eardrum can become stiff very early, during infancy for example, without that affecting your hearing capabilities. Coming back to the issue of deafness in newer generations, the main problem has to do with exposure time. Too loud for too long. Once again, it’s an educational problem. By default, personal music players ought to be used at the lowest possible level. But then there’s the hedonistic problem: I love to hear music at loud volumes! People come to me and say: "Chopin has to be listened to at high volumes."

I sometimes ask myself why people want to listen to a recording of a piano louder than an actual piano can sound?

It’s once again a problem of education. And there’s also the placement of speakers in raves and concerts, where people stand right next to them and get the sound blasted directly into their ears. Music is often played in places that weren’t conceived with that use in mind. I can understand the quest for hedonism and trances, and it’s okay. As long as it’s not dangerous! People have to be aware. Education and prevention.

Some people distribute earplugs at concerts…

Because they were forced to! But these earplugs aren’t necessarily the best, even if 10dB between 100dB and 110dB is much more than between 30dB and 40dB, since it’s logarithmic. Once again, education and prevention. Don’t forget a very important thing: Ear injuries are, most of the time, irreversible.
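The remark about 10dB meaning more at the top of the scale can be checked with a little arithmetic. The Python sketch below converts levels to sound intensity, assuming the standard reference intensity of 10⁻¹² W/m² for 0dB. That reference value and the function name are assumptions added for illustration; they do not come from the interview.

```python
import math

# Assumed reference intensity for 0 dB, in watts per square meter.
REFERENCE_INTENSITY = 1e-12

def intensity_from_level(level_db):
    """Convert a sound intensity level in dB to an intensity in W/m^2."""
    return REFERENCE_INTENSITY * 10 ** (level_db / 10)

# Absolute intensity added by each 10 dB step.
step_low = intensity_from_level(40) - intensity_from_level(30)
step_high = intensity_from_level(110) - intensity_from_level(100)

# The ratio across any 10 dB step is the same factor of ten.
ratio_low = intensity_from_level(40) / intensity_from_level(30)
ratio_high = intensity_from_level(110) / intensity_from_level(100)

print(f"30 dB to 40 dB adds {step_low:.1e} W/m^2 (ratio {ratio_low:.0f}x)")
print(f"100 dB to 110 dB adds {step_high:.1e} W/m^2 (ratio {ratio_high:.0f}x)")
print(f"The louder step adds {step_high / step_low:.0e} times more intensity")
```

Both steps multiply the intensity by ten, but in absolute terms the step from 100dB to 110dB adds about ten million times more acoustic intensity than the step from 30dB to 40dB. Under the same assumption, an earplug that attenuates by 10dB at concert levels removes nine tenths of the incoming intensity.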