Today we'll continue our series on the volume wars. This time, we'll focus mainly on compression.

[If you haven’t yet read part 1, you can read it here.]

After proving indispensable for radio (and incipient TV) broadcasters, the compressor/limiter started to take on a much more important role in music production. With the advent and standardization of multitrack recording, the band was no longer captured as a single entity but as a group of musicians recorded separately, which allowed better control of the volume and dynamics of each recording. The ability to focus on individual tracks also opened up more processing options, like EQ, panning, etc.

Multitrack recording made possible a more eclectic combination of instrument types. The mix of acoustic, electronic and synthetic sounds (due to the arrival of the first synthesizers and of electric and electromechanical instruments) called for a different approach to mixing, and a different treatment of the elements available. The sound of a band or an orchestra no longer depended entirely on the art of orchestration and the position of the musicians with respect to each other, but also on the introduction of instruments that could be amplified enough to drown out all the others combined.

Home on the dynamic range

“Now that’s what I call dynamic!” How many times have you heard people use that term to describe overcompressed music (that is, music that sounds pretty loud, even at low volumes) when they’re actually describing the exact opposite of what dynamics means? Dynamics, or to call it by its full name, dynamic range, is the span between the loudest and quietest parts of a song or sound, and everything that happens in between.

Good dynamics are the responsibility of the musicians, first and foremost, because they’re the ones who create the nuances in the music, which are at the core of any performance. I often mention the absurd example of a pianist who plays all notes with the same intensity and an audio engineer who records him or her with 88 microphones, one per key, and creates dynamics by lowering or raising the level of each mic (which would obviously not be enough, but remember that I resorted to the absurd to make a point). Audio overcompression (not to be confused with data compression, which makes files smaller) downright annihilates the expressiveness of a musician and the nuances in the music, with dramatic consequences for the sound itself and for our ears.

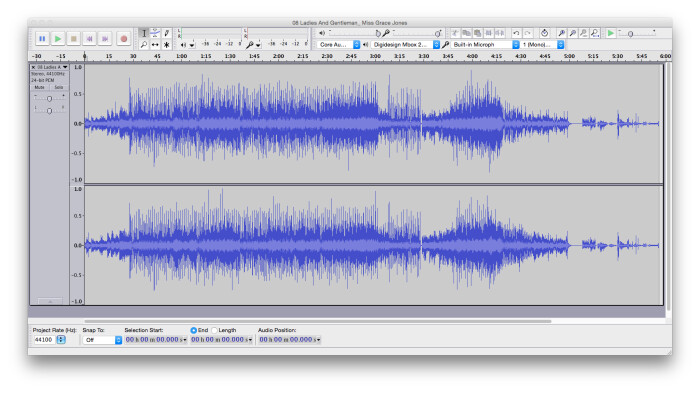

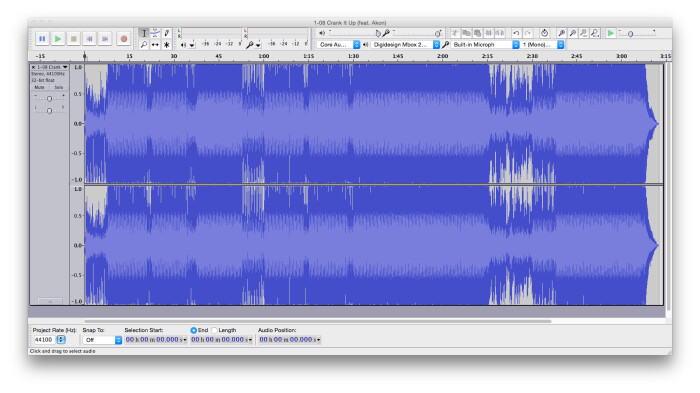

What can justify going ─ in only 30 years, and after many decades of careful and dedicated work with audio ─ from this:

To this?

Compressing what?

As obvious as it seems, you can still find people confused about the notion of “compression” today. That’s due to the same word being used to describe two totally different processes. One is dynamic range compression, which results from using dynamics processors like compressors, limiters, expanders, transient shapers, etc. And the other is data compression, which is a process applied to a file when you wish to reduce its size to facilitate its transmission, especially online.

Dynamic range compression reduces the difference between the loudest and quietest passages of an audio file. With the peaks brought down, the whole signal can then be turned up, which raises the overall volume. One of the fundamental causes of the loudness wars is that everyone wants their song to sound at least as loud as, if not louder than, the songs heard before and after it on the radio, in the club, or on mobile devices. As a result, mixers and mastering engineers squeeze every decibel they can out of the songs they work on through the heavy use of limiters, which produce the most extreme form of compression. The result has been an escalation in volume and a consequent reduction of dynamic range, as the peaks get crushed in order to push the average level as high as possible without clipping. The two waveforms shown above are a testament to how things have changed in terms of loudness.
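To make the trade-off between peak level and average level concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the test signal, the `threshold` and `ratio` values and all function names are made up for this example, and the gain curve is applied sample by sample with no attack or release, which is not how real compressors and limiters behave.

```python
import math

SAMPLE_RATE = 8000  # Hz, arbitrary choice for this toy signal


def peak(samples):
    """Highest absolute sample value."""
    return max(abs(s) for s in samples)


def rms(samples):
    """Root mean square, a rough stand-in for average level."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB: one simple indicator of dynamics."""
    return 20 * math.log10(peak(samples) / rms(samples))


def compress(samples, threshold=0.25, ratio=8.0):
    """Static gain curve (assumed values): above the threshold, every
    `ratio` dB of input level yields only 1 dB of output level."""
    out = []
    for s in samples:
        level = abs(s)
        if level > threshold:
            level = threshold * (level / threshold) ** (1.0 / ratio)
        out.append(math.copysign(level, s))
    return out


def normalize(samples, target_peak=1.0):
    """Turn the whole signal up (or down) so its peak hits target_peak."""
    gain = target_peak / peak(samples)
    return [s * gain for s in samples]


def make_tone():
    """One second of a 440 Hz tone: a loud burst, then a quiet passage."""
    tone = []
    for n in range(SAMPLE_RATE):
        amplitude = 1.0 if n < SAMPLE_RATE // 10 else 0.1
        tone.append(amplitude * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE))
    return tone


original = normalize(make_tone())
squashed = normalize(compress(make_tone()))

for name, signal in (("original", original), ("compressed", squashed)):
    print(f"{name}: RMS {rms(signal):.3f}, "
          f"crest factor {crest_factor_db(signal):.1f} dB")
```

Both versions peak at the same level, so neither clips, but the compressed one has a higher RMS and a smaller crest factor: the quiet passage has been pulled up toward the loud one, which is the "louder but less dynamic" result described above.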

The other type of compression, data compression, is a completely different animal, and is not an audio processing effect. It was conceived as a solution to the low transfer rates of the first communication protocols on the Internet. It allows you to significantly reduce the size of a file and, thus, the transfer rate needed to send it from one point to another (or to stream it).

For audio, as for any other media, the rate is measured in kilobits per second (kbit/s or kb/s, except for multi-channel transfers at very high resolutions). For example, the bit rate of a 16-bit/44.1 kHz stereo WAV or AIFF file is about 1411 kb/s (and 2117 kb/s for the same file at 24-bit resolution). When you say "128 MP3" or "256 AAC," what you are actually saying is that the file has a bit rate of 128 kb/s or 256 kb/s, respectively. When you compare that to non-compressed audio files (remember we are talking about data compression here), it’s easy to understand that lots of things have gotten lost on the way. And what are the consequences, in terms of audio? We’ll look at that in the next installment.
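For readers who want to check the figures quoted above, here is a short Python sketch of the arithmetic. The function name is hypothetical, and it assumes plain uncompressed PCM audio with 1 kb counted as 1000 bits.

```python
def pcm_bit_rate_kbps(sample_rate_hz, bit_depth, channels):
    """Bit rate of uncompressed PCM audio in kb/s (1 kb = 1000 bits)."""
    return sample_rate_hz * bit_depth * channels / 1000


cd_quality = pcm_bit_rate_kbps(44_100, 16, 2)  # 16-bit/44.1 kHz stereo
hi_res = pcm_bit_rate_kbps(44_100, 24, 2)      # same file at 24 bits

print(f"16-bit: {cd_quality:.1f} kb/s, 24-bit: {hi_res:.1f} kb/s")

# How many times fewer bits per second a lossy file carries
for label, lossy_kbps in (("128 kb/s MP3", 128), ("256 kb/s AAC", 256)):
    ratio = cd_quality / lossy_kbps
    print(f"{label}: about {ratio:.1f} times fewer bits than 16-bit PCM")
```

The first line prints 1411.2 kb/s and 2116.8 kb/s, and the ratios come out at roughly 11 to 1 for the 128 kb/s file and 5.5 to 1 for the 256 kb/s file.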