In this new installment we'll continue discussing the consequences of volume on music, sound and human ears, and we'll see how digital technology has enabled the reduction of dynamic range in recorded music.

After several articles dedicated to the causes and consequences of the volume war, we can finally start to see everything more clearly, especially regarding its origins (and don’t worry, I’ll look into all its consequences in due time).

Loudness is a controversial topic that often sparks heated discussions. Some people use it as a way to attack certain music styles, implying that one is better than another (which was never the goal of these articles, obviously).

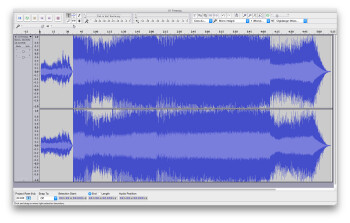

Let me just state for the record that absolutely nothing ever justifies crushing and destroying sound (with overcompression and distortion) when you are producing music. A techno/EDM track (to avoid going into sub-sub-classifications and tags, whose only goal is marketing) that is correctly mixed, respecting the dynamics, the transients, the frequency balance and every other technical consideration, will sound better at loud volumes than one tailored to current mastering trends. It’s pretty rare to find anything having to do with aesthetics that doesn’t have some dynamics. And yet, many albums in all sorts of music styles are terribly “produced,” with a dynamic range that rarely exceeds 5 or 6 dB.

And that applies to everybody, even to the most watchful musicians/producers. Take, for instance, Trevor Horn, one of the great producers of the last three decades, and whom we have mentioned in previous articles. He released an album called Made In Basing Street under the name Producers, with a sound halfway between retro and modern pop. But unfortunately it’s impossible to listen to. The overall dynamic range? 6 dB, with intersample clipping, etc.

Intersample clipping? It’s one of the risks of digital-to-analog conversion: the reconstruction filter can produce levels exceeding 0 dBFS between samples, even when no individual sample does. Watch this video by FabFilter explaining the phenomenon.
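To make the idea concrete, here is a minimal sketch (not a true-peak meter as defined by any standard). It assumes a textbook worst case: a sine at a quarter of the sample rate whose samples all fall 45 degrees away from the crests. The function names and the 8x evaluation grid are illustrative choices, and the "reconstructed" waveform is simply the same sine evaluated between the samples, which is what an ideal reconstruction filter would produce for such a signal.

```python
import math

def sine(n_samples, freq_ratio, phase, oversample=1, gain=1.0):
    """Evaluate gain*sin(2*pi*freq_ratio*t + phase), with t in sample periods,
    on a grid `oversample` times finer than the sample grid."""
    return [
        gain * math.sin(2 * math.pi * freq_ratio * (k / oversample) + phase)
        for k in range(n_samples * oversample)
    ]

def peak_db(samples):
    """Peak level in dB relative to full scale (1.0)."""
    return 20 * math.log10(max(abs(s) for s in samples))

# Sine at fs/4, shifted by 45 degrees: every sample lands at about 0.707 of the crest.
raw = sine(64, 0.25, math.pi / 4)

# Normalize so that the highest *sample* sits exactly at full scale (0 dBFS).
gain = 1.0 / max(abs(s) for s in raw)

sample_peak_db = peak_db(sine(64, 0.25, math.pi / 4, gain=gain))
# Same waveform evaluated between the samples (assumed ideal reconstruction).
true_peak_db = peak_db(sine(64, 0.25, math.pi / 4, oversample=8, gain=gain))

print(f"sample peak: {sample_peak_db:.2f} dBFS")
print(f"peak between samples: {true_peak_db:.2f} dB")
```

The sample values never exceed full scale, yet the waveform between them peaks about 3 dB higher, which is exactly the kind of overshoot a converter has to deal with.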

Another result from these discussions is the idea that we can accept dynamics compression given today’s listening conditions (and I’m not talking about dynamics compression used during mixdown or in a creative way, with sidechains and so forth, but about the one used in mastering, which doesn’t even deserve the name). Once again, nothing justifies reducing the dynamics of a song for the sake of being able to listen to it in your car or with a portable music player (through earbuds or headphones, obviously). What about when you are home? Should artists start delivering a mix for mobile listening and another one for home use? Nine Inch Nails did this for one of its latest albums (without making any direct reference to the distinctions stated above). If the solution is to make a dual mix/mastering the standard, things can start getting crazy really fast (why not even supply three or four mixes? One for the car, another one for the subway, etc.).

Listening evolution

One of the main problems, directly related to what I’ve mentioned in previous articles, is a change of habits. Let me be clear: I’m not saying you should be attached to the past, nor am I questioning the evolution of society or the easily identifiable benefits of progress. However, and without any need to mention Orwell, Bourdieu, Ellul, Michéa, or Kurzweil (or even Philip K. Dick), you surely know that the possibilities offered by a technical innovation don’t always result in their adequate use.

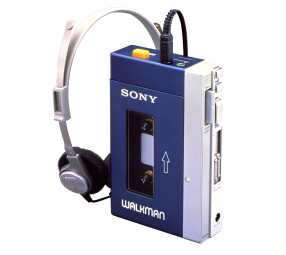

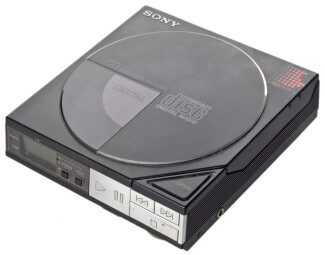

Music listening has become mobile over time. First came portable radios (often with very poor sound quality), then the Compact Cassette, introduced in 1963, and the Sony Walkman (around 1980), with the associated flutter, crosstalk, and background noise. The arrival of the CD Walkman (or Discman, like the D-50 from 1984) obviously solved the issues related to the medium, but by no means did it do the same for the music. That is, if you can speak of “issues” when talking about nuances and dynamics: on the street or anywhere else, even with the best player and the best headphones, you simply can’t fight environmental noise, which means part of the music will invariably be masked by it.

Squaring the circle

The arrival of digital technology answered one of the issues posed by the compressor, and its close relative, the limiter. In the hardware world, no compressor/limiter can act in real time. Remember: A compressor is an automated volume fader, whose action depends on the analysis of a given signal (whether it’s the one being processed or another signal, via the sidechain, for instance).

No real-time analysis can be predictive. So, even the fastest compressors (the 1176LN, for example, with an attack time as short as 20 µs) will let part of the transients through, preventing an actual flattening of the peaks (although there are several solutions for that when you have a sidechain available, requiring some contortions and not without risks…).
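A toy model shows why a finite attack time lets transients through. This is only a sketch under simplifying assumptions, not a model of the 1176 or of any real unit: the gain follows a one-pole smoothing curve on the way down, release is instantaneous, and the function name, threshold, and attack length are all made up for the illustration.

```python
import math

def limit_with_attack(signal, threshold, attack_samples):
    """Feed-forward peak limiter whose gain can only fall gradually.
    Simplified: one-pole attack, instantaneous release."""
    coeff = math.exp(-1.0 / attack_samples)  # per-sample smoothing coefficient
    gain = 1.0
    out = []
    for x in signal:
        level = abs(x)
        # Gain that would bring this sample exactly down to the threshold.
        target = threshold / level if level > threshold else 1.0
        if target < gain:
            # Attack: move only part of the way toward the target gain.
            gain = coeff * gain + (1.0 - coeff) * target
        else:
            # Release: jump straight back up (kept trivial on purpose).
            gain = target
        out.append(x * gain)
    return out

# Quiet passage, a sudden full-scale burst, then quiet again.
signal = [0.1] * 20 + [1.0] * 20 + [0.1] * 20
out = limit_with_attack(signal, threshold=0.5, attack_samples=5)

overshoot = max(abs(s) for s in out)
print(f"threshold 0.50, highest output sample {overshoot:.2f}")
```

The first samples of the burst leave the limiter well above the threshold, and the level only settles close to it once the attack has run its course. Shortening the attack reduces the overshoot but never removes it, since the gain reacts to a sample that has already arrived.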

In the digital realm, while predictive real-time analysis is still impossible, you can measure the time it takes to analyze and process the signal, then delay the audio by the same amount to give the impression of real time. Try it: take a project with lots of tracks, gradually add compressors (capable of so-called “real-time” processing), and see what latency you get when playing from a MIDI keyboard.
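The delay trick described above is usually called look-ahead. The following sketch illustrates the principle under stated assumptions and does not reproduce any particular plug-in: the function name, the `lookahead` length, and the min-then-average gain smoothing are choices made for this example. The gain is computed from samples that are still "in the future" relative to the delayed audio, so it is already low enough when the peak arrives.

```python
def lookahead_limit(signal, threshold, lookahead):
    """Peak limiter that delays the audio by `lookahead` samples so that
    the gain can start falling before a peak reaches the output."""
    n = len(signal)
    # Gain each sample would need on its own to stay at or below the threshold.
    target = [min(1.0, threshold / abs(x)) if x != 0 else 1.0 for x in signal]
    # Lowest gain required by the current sample and the next `lookahead` ones.
    ahead = [min(target[i:i + lookahead + 1]) for i in range(n)]
    # Average over the current and previous `lookahead` values for a smooth ramp
    # (the first value is repeated where the window reaches before the start).
    gain = []
    for i in range(n):
        window = [ahead[max(0, j)] for j in range(i - lookahead, i + 1)]
        gain.append(sum(window) / len(window))
    # The price to pay: every output sample comes out `lookahead` samples late.
    return [0.0] * lookahead + [x * g for x, g in zip(signal, gain)]

# Quiet passage, a sudden full-scale burst, then quiet again.
signal = [0.1] * 20 + [1.0] * 20 + [0.1] * 20
limited = lookahead_limit(signal, threshold=0.5, lookahead=5)

print(f"highest output sample: {max(abs(s) for s in limited):.2f}")
print(f"added latency: {len(limited) - len(signal)} samples")
```

No output sample exceeds the threshold, but the whole signal is shifted by the look-ahead length. Stack enough processors of this kind in a project and the accumulated delay becomes audible when playing live.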

And this leaves the door open for people to crush sound at will, without fear of exceeding the fatal 0 dBFS (but without really taking the true-peak level in dBTP into account, see above and here). While not all producers jumped at the opportunity with the arrival of the CD (some tried to stay as faithful as possible to the original sound), the habit of massacring/compressing everything quickly caught on.