In the previous article, we took a first look at sampling, from the point of view of Pulse Code Modulation (PCM). In this installment we'll study wavetables and sample banks.

The PCM standard is used in digital audio, computer music, CD production, and digital telephony. With PCM, the amplitude of the signal is measured at regular intervals and digitally encoded with a precision that depends on the number of bits employed.
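As a quick refresher, here is a minimal Python sketch of that idea: measure a signal at regular intervals and round each measurement to an integer code whose range depends on the bit depth. The function names (`pcm_encode`, `sine_440`) and the 440 Hz test tone are my own choices for illustration, not part of any standard.

```python
import math

def pcm_encode(signal, sample_rate, duration, bits):
    """Measure signal(t) at regular intervals and round each value to a signed integer code."""
    max_code = 2 ** (bits - 1) - 1          # 127 for 8 bits, 32767 for 16 bits
    count = int(sample_rate * duration)     # number of measurements taken
    codes = []
    for n in range(count):
        t = n / sample_rate                               # time of the n-th measurement
        amplitude = max(-1.0, min(1.0, signal(t)))        # keep the value in [-1, 1]
        codes.append(round(amplitude * max_code))         # fewer bits means coarser steps
    return codes

def sine_440(t):
    """Hypothetical test signal: a 440 Hz sine wave."""
    return math.sin(2 * math.pi * 440 * t)

codes_8 = pcm_encode(sine_440, 44100, 0.001, 8)    # one millisecond at 8 bits
codes_16 = pcm_encode(sine_440, 44100, 0.001, 16)  # the same millisecond at 16 bits
```

Both lists describe the same millisecond of sound; the 16-bit version simply has far more possible values to choose from for each measurement.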

We also saw in the last article that sampling is the basic element of digitization. And it’s here that the terminology plays a trick on us. Hold on tight…

A nice mess

There are two aspects of sampling that are often confusing. The first concerns the notion of a “sample” itself. A signal made up of many samples (remember: 44,100 samples per second for a standard CD) that has been digitized in its entirety is called…a sample, too! When does this happen? When the fully digitized signal is used as an audio source to simulate a real instrument. Collections of these “whole sounds” are referred to as “sample banks.”

And this is where the second confusing issue arises, because these banks must not be confused with “wavetables” (which are also made up of samples, in the first sense described above). This second confusion was fueled by certain marketing people in the '90s who called sample banks “wavetable modules” (the Wave Blaster daughterboard for Creative Labs cards, for example).

If you’re a bit lost right now, don’t worry: we are here to straighten things out for you!

Wavetable synthesis

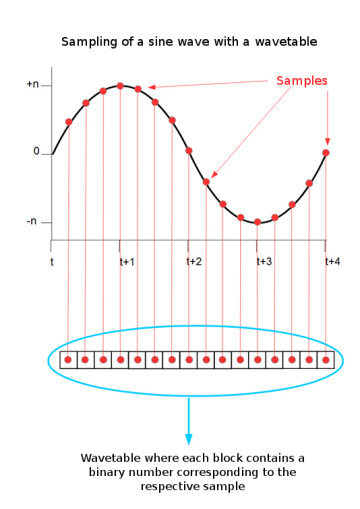

Wavetable synthesis only works in the digital realm. It builds on the fact that a periodic waveform is repetitive by nature. Rather than wasting computing resources by making the synth or computer determine the sample values of an entire audio signal, wavetable synthesis only needs to determine the sample values for a single cycle of the waveform you want to reproduce. Each sample value is then stored in a “block.” Playing back these blocks one after another amounts to reading all the data that makes up a cycle. To reproduce the cycle endlessly, the system simply needs to read the list of blocks in a loop. All these blocks together are called a wavetable.
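Here is a minimal sketch of that principle in Python. The table size of 64 blocks and the function names are arbitrary choices of mine, and a sine wave stands in for whatever waveform you would actually store.

```python
import math

TABLE_SIZE = 64  # hypothetical number of blocks in the table

def build_wavetable(size=TABLE_SIZE):
    """Compute the sample values of one single cycle (a sine wave here)."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def play_looped(table, num_samples):
    """Read the blocks one after another, wrapping back to the start of the table."""
    return [table[n % len(table)] for n in range(num_samples)]

table = build_wavetable()
output = play_looped(table, 3 * TABLE_SIZE)  # three identical cycles from one stored cycle
```

Only 64 values were ever computed, yet the output can be as long as you like.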

In the third article of this series, we already saw that the pitch of a periodic waveform depends directly on its frequency, in other words, the number of cycles the wave completes per second. When it comes to wavetable synthesis, the most effective way found to change the pitch of a waveform is to skip blocks as the table is read (reading only every second block, for instance). Each cycle is then traversed faster, which raises the frequency of the signal…and its pitch! This is the principle behind digital oscillators, DOs, which we already mentioned in article 6 of this series.
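To make this concrete, here is a rough sketch of such an oscillator. The read position moves through the table by a step proportional to the desired frequency, so a bigger step means fewer blocks read per cycle. The sample rate of 25,600 Hz is a toy value I picked so that the steps come out as whole numbers, and the lookup simply truncates the position; real oscillators usually interpolate between blocks.

```python
import math

def build_wavetable(size):
    """One cycle of a sine wave, stored as a list of blocks."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def oscillator(table, frequency, sample_rate, num_samples):
    """Read the table with a step that depends on the desired frequency."""
    step = frequency * len(table) / sample_rate   # blocks advanced per output sample
    position = 0.0
    out = []
    for _ in range(num_samples):
        out.append(table[int(position)])          # truncating lookup, no interpolation
        position = (position + step) % len(table) # wrap around at the end of the table
    return out

table = build_wavetable(256)
low = oscillator(table, 100, 25600, 256)   # step 1.0: every block is read, one cycle
high = oscillator(table, 200, 25600, 256)  # step 2.0: every second block, two cycles
```

In the same 256 output samples, `low` goes through the table once and `high` goes through it twice, which is exactly an octave higher.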

The method I just described corresponds to what is called fixed wavetable synthesis, which means it uses a single waveform. However, several wavetables, each with a different sampled waveform, can coexist within a single synthesizer. This type of synth can move from one wavetable to another, and thus from one waveform to another, under the control of a modulation parameter, creating very interesting transition effects! This is called multiple wavetable synthesis.
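One simple way to picture such a transition is a crossfade between two neighboring tables, driven by a single parameter. The sketch below assumes a linear blend and a bank of just two tables (a sine and a sawtooth); actual synths may switch or interpolate between tables differently, so treat this as an illustration rather than a description of any particular instrument.

```python
import math

SIZE = 64  # hypothetical number of blocks per table

def sine_table(size=SIZE):
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def saw_table(size=SIZE):
    return [2 * i / size - 1 for i in range(size)]

def morph(tables, position):
    """Blend the two tables surrounding `position` (0 = first table, len-1 = last).

    Assumes the bank holds at least two tables of equal size.
    """
    position = max(0.0, min(float(len(tables) - 1), position))
    index = min(int(position), len(tables) - 2)   # lower of the two tables to blend
    mix = position - index                        # 0.0 = all lower, 1.0 = all upper
    lower, upper = tables[index], tables[index + 1]
    return [(1 - mix) * a + mix * b for a, b in zip(lower, upper)]

bank = [sine_table(), saw_table()]
halfway = morph(bank, 0.5)  # a cycle that is half sine, half sawtooth
```

Sweeping `position` slowly from 0 to 1 (with an LFO or an envelope, say) turns the sine into a sawtooth one cycle at a time.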

Sample banks

Sample banks are composed of “whole” sounds that have been prerecorded and can be loaded into memory, within a hardware module or a computer, to give you quick access to realistic sounds.

Traditionally, only certain “key” notes of an instrument are sampled, and an algorithm derives the intermediate notes by shifting the pitch of a sampled one, which can sometimes have a negative impact on the quality of the sound played back. Nowadays, digital storage capacities are such that you can, for example, digitize an entire piano not only with one sample per note, but with almost one sample per velocity level.
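To give an idea of how an intermediate note can be derived from a sampled “key” note, here is a simplified sketch. It assumes equal temperament (each semitone multiplies the playback speed by the twelfth root of 2), MIDI-style note numbers, and plain linear interpolation; the function names are mine, and real samplers use more refined resampling.

```python
def playback_ratio(root_note, target_note):
    """Speed factor needed to turn the sampled note into the target note."""
    return 2 ** ((target_note - root_note) / 12)

def repitch(sample, ratio):
    """Read the sample faster or slower, interpolating between neighboring values."""
    out = []
    position = 0.0
    while position < len(sample) - 1:
        i = int(position)
        frac = position - i
        out.append((1 - frac) * sample[i] + frac * sample[i + 1])
        position += ratio
    return out

recorded = [0, 1, 2, 3, 4, 5, 6, 7, 8]                     # stand-in for a recorded note
octave_up = repitch(recorded, playback_ratio(60, 72))      # twice as fast
octave_down = repitch(recorded, playback_ratio(60, 48))    # half as fast
```

Notice that the higher note is also shorter and the lower one longer. That side effect, along with the shifted timbre, is one reason stretching a sample too far from its original pitch sounds unnatural.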

Samplers handle all this in the background while you play, so that you don’t have to worry about anything. For example, if you’re playing a sampled piano and you hit a note, the software inside the hardware module or your computer chooses the sample, from the many that make up that piano sound, that best matches your playing in terms of factors such as attack or velocity. In addition, lots of samplers allow you to edit scripts to define playback rules, for example if you want the sampler to play back a third above every note you play.
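The selection logic might look something like the sketch below. Everything in it is hypothetical: the `Zone` structure, the sample names and the rule itself (take the nearest sampled note, then the softest velocity layer that covers the incoming velocity) are my own simplification, not the behavior of any specific sampler.

```python
from dataclasses import dataclass

@dataclass
class Zone:
    root_note: int      # MIDI-style number of the sampled note
    max_velocity: int   # highest velocity this layer is meant for (1 to 127)
    name: str           # hypothetical sample name

def choose_zone(zones, note, velocity):
    """Pick the nearest sampled note, then the softest layer covering the velocity."""
    roots = {z.root_note for z in zones}
    nearest_root = min(roots, key=lambda r: (abs(r - note), r))  # ties go to the lower note
    layers = sorted((z for z in zones if z.root_note == nearest_root),
                    key=lambda z: z.max_velocity)
    for zone in layers:
        if velocity <= zone.max_velocity:
            return zone
    return layers[-1]   # harder than every layer: fall back to the loudest one

piano = [
    Zone(60, 63, "C4_soft"), Zone(60, 127, "C4_hard"),
    Zone(72, 63, "C5_soft"), Zone(72, 127, "C5_hard"),
]
chosen = choose_zone(piano, note=62, velocity=40)
```

Here a softly played D lands on the soft C4 sample, which the sampler would then repitch by two semitones.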

In practice

When I wrote earlier in this series that sampling came about only because of the need for a more practical method than additive synthesis, and hinted that the first sampler was the Fairlight CMI, I was simplifying things a bit. In fact, ever since the 1920s, musicians and engineers had been looking for a way to manipulate pre-recorded sounds in more or less real time. Among the instruments that came out of this search, the most famous is the Mellotron, introduced in the '60s, which used a length of magnetic tape per note and timbre, and was widely adopted by bands at the time.

But it was certainly Fairlight that first introduced, within the scope of synthesis, the concept of digital sampling as we know it today, including the ability to modify the harmonic content of a sound with a simple tap of a light pen on a screen. It opened the door to the E-MU Emulators, to the famous Akai samplers from the S Series and even to modern software samplers like Native Instruments Kontakt or Spectrasonics Omnisphere.

Wavetable synthesis was mainly introduced and used by Wolfgang Palm with the PPG brand and the famous “Wavecomputer” and “Wave 2” synths in the early '80s; and also by Waldorf with the “Microwave” and “Wave” (1993). But there are many manufacturers that use it as an alternative sound production mode, and ─ given its digital nature ─ also within many virtual synths, sometimes even side-by-side with sample-based synthesis.

Thus, as you can see in the screenshot on the left, the first of the three sound generators of the famous Native Instruments Absynth uses a sample, in the form of a WAV file, as a component of the sound it's producing.

All this leads us to conclude that, thanks to the galloping virtualization of everything, the frontier between synthesis based on basic waveforms and the playback of pre-recorded sound elements has never been as blurry as it is today.