Throughout history, humans all around the globe have never stopped diversifying the uses of music ─ ritual, recreational, intellectual, etc. ─ and have produced it using their voices and bodies or through the development and practice of musical instruments. Up to the 19th century, the basic methods for creating music remained the same: hitting animal skins, rubbing, plucking or hitting strings, and blowing into bone, wooden or metal pipes. Then everything began to change...

In the 20th century, humans became interested in manipulating the structure of their environment ─ the atomic bomb being a worst-case example and sound synthesis a best-case one! The idea behind the latter is not to reproduce predefined sounds, but rather to create completely unheard-of sounds from scratch, or to modify the structure of one sound to create another. Interesting, isn’t it?

Throughout this series of articles, we will try to define, in the simplest possible way, what sound synthesis is. We’ll start by recalling what sound is made of and which parameters we can act upon to modify it. We’ll move on to the basic elements of any synth worthy of the name and what their function is, as well as the main types of synthesis available. We will also provide you with some practical examples, as well as a historical recap of the evolution of sound synthesis since it first came to be and a brief presentation of the most emblematic and surprising instruments in the field.

But, first things first…

What is sound synthesis?

Sound synthesis rests on a simple principle: to act on the basic elements of sound in order to replicate it or go beyond its reality. It’s illustrative to look at its close relative, visual synthesis, which follows the same principle and to which we have all been exposed, given the importance of cinema and video games. In visual synthesis, images are created or reproduced artificially by working with the basics of optical perception, namely shape, matter, color and luminosity. The goal may be to create the illusion of the most authentic reality, or to bend that reality so as to produce the most fantastic results.

In the traditional sense, the basic element of music is the note. In audio synthesis, what matters more than the notes themselves, which become sound events like any other, is what they are made of. In ordinary life, a note played by an instrument, a spoken word and a running motor are very different events, which nevertheless all excite your sense of hearing. That means they share a common constituent element that defines their nature. And it’s precisely that element that we seek to influence with audio synthesis.

But what’s that element?

Good vibes

What is sound anyway?

Sound can be defined in two ways. First, sound is a signal of the environment perceived by your sense of hearing, which ties the mechanical message picked up by the ear to its interpretation by the brain.

This aspect of sound, as important as it is, is not what we will look into in these articles. What interests us here instead is the physical nature of sound.

From a physical point of view, sound is, first and foremost, the creation and propagation of a vibration. To propagate, a vibration needs an elastic medium. What is an elastic medium? Quite simply, a material that can be deformed and then return to its original state after a disturbance. Air and water are both elastic media, but wood, glass and concrete (oh, yes!) are, too. In fact, it doesn’t matter which solid, liquid or gaseous material you take: they can all transmit a sound vibration. There’s no place where sound can’t propagate, except in a vacuum, such as outer space: Star Wars’ Death Star could blow up as many planets as there are, but it would only produce absolute silence.

OK, now you know that sound is, above all ─ drum roll ─ vibration! And it’s precisely that basic element that you want to manipulate with sound synthesis.

But to be able to do that, you need to represent and quantify it.

Riding the waves

This is where the sound wave comes into play. A wave is the physical propagation of a vibration through an elastic medium.

Do note, however, that the traveling of the wave does not entail the shifting of the medium itself. Only the molecules of the medium in which the wave is propagating actually vibrate. Each molecule oscillates around its rest position and passes its vibration on to the next one, without being carried along by the wave or dragging its neighbor along. Thus, for example, when you bathe in the sea, your body follows the up-and-down movement of the waves, but you are not displaced horizontally by them.
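To make this idea concrete, here is a minimal Python sketch. It is only an illustration under simplifying assumptions: it models an idealized sinusoidal wave traveling along a single dimension, and the constants `AMPLITUDE`, `FREQUENCY` and `WAVELENGTH`, as well as all function names, are hypothetical values chosen for this example. It shows that a particle's displacement averages out to zero over one full oscillation, while a crest of the wave keeps moving forward.

```python
import math

# Illustrative values only (assumptions, arbitrary units).
AMPLITUDE = 0.01   # peak displacement of a particle from its rest position
FREQUENCY = 2.0    # oscillations per second
WAVELENGTH = 1.0   # distance between two successive crests


def displacement(rest_position, t):
    """Displacement of one particle from its rest position at time t."""
    phase = 2 * math.pi * (FREQUENCY * t - rest_position / WAVELENGTH)
    return AMPLITUDE * math.sin(phase)


def average_displacement(rest_position, steps=1000):
    """Average displacement of one particle over one full period."""
    period = 1 / FREQUENCY
    total = sum(
        displacement(rest_position, k * period / steps) for k in range(steps)
    )
    return total / steps


def crest_position(t):
    """Position at time t of the crest located at 3/4 wavelength when t = 0."""
    return 0.75 * WAVELENGTH + FREQUENCY * WAVELENGTH * t


if __name__ == "__main__":
    # The particle wobbles around its rest position but goes nowhere on average.
    print("average displacement of one particle:", average_displacement(0.3))
    # The crest, in contrast, travels steadily through the medium.
    print("distance covered by a crest in 1 s:",
          crest_position(1.0) - crest_position(0.0))
```

In this model the crest advances by `FREQUENCY * WAVELENGTH` units every second, whereas each particle never strays more than `AMPLITUDE` from where it started.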

And it’s not for nothing that the word “wave” brings to mind water. Indeed, it was water that first made humans aware of the existence of the phenomenon of vibrations. In fact, one of the most frequently used examples to illustrate the propagation of sound is that of a stone thrown into a pond. The impact of the stone on the water symbolizes the moment the sound is emitted, while the ripples that propagate uniformly all around it represent the wave it has generated.

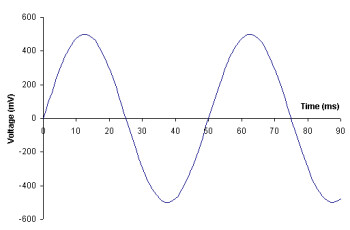

Most often, a waveform is represented as a two-dimensional graph in which the X-axis corresponds to time and the Y-axis to the amplitude of the waveform (as in the diagram above).
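As a small illustration of this time/amplitude representation, the following Python sketch computes the points of a very simple waveform, a sine wave. The function name `sine_waveform` and its parameters are assumptions made for this example, and the sine shape is just one convenient choice among the many waveforms that exist. Each point is a pair made of a time value (X-axis) and an amplitude value (Y-axis).

```python
import math


def sine_waveform(frequency_hz, duration_s, sample_rate_hz=1000, peak=1.0):
    """Return a list of (time, amplitude) points describing a sine wave.

    time is in seconds (X-axis); amplitude ranges from -peak to +peak (Y-axis).
    """
    sample_count = round(duration_s * sample_rate_hz)
    points = []
    for k in range(sample_count):
        t = k / sample_rate_hz
        amplitude = peak * math.sin(2 * math.pi * frequency_hz * t)
        points.append((t, amplitude))
    return points


if __name__ == "__main__":
    # One second of a 5 Hz wave, showing one point out of every 25.
    for t, amplitude in sine_waveform(5, 1.0)[::25]:
        print(f"t = {t:.3f} s   amplitude = {amplitude:+.3f}")
```

Plotting these points with time on the horizontal axis and amplitude on the vertical axis would give the familiar undulating curve.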

In our next installment, we will study the waveform in more detail to better understand how to influence it in order to shape sound.