Graphic and stochastic synthesis are not widely used, yet they are quite interesting. Let's see why...

Stochastic synthesis

Stochastic synthesis isn’t really a form of synthesis in itself; it’s more of a family of techniques. Think of “stochastic” as a synonym for random. Its goal is to model sounds that have no recognizable periodic structure. Does that ring a bell? Indeed, we are talking about noise, which we mentioned in article 5 of this series.

This is where we run into one of the fundamental problems of computer modeling: computers cannot truly model random events, since the processes they use are deterministic by nature. There is nothing random about computing, even if certain bugs might make you think otherwise!

If a computer produces a series of apparently random numbers, that series will necessarily repeat after a certain number of iterations. The only thing you can do about it is make the series longer, so as to delay the moment when it starts over. That’s why such numbers are called pseudo-random. Note that, in certain cases, you can use fairly large wavetables (see article 18) preloaded with pseudo-random data, which then only need to be “played back.” Noise generators can also be used to modulate the frequency or amplitude of periodic waves (see article 21).
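To make the idea of a repeating pseudo-random series concrete, here is a minimal sketch using a linear congruential generator (LCG), one classic textbook technique and not necessarily what any particular synth uses. All function names and parameter values are hypothetical and chosen for illustration; the tiny modulus in the example call is deliberate, so that the repetition is easy to see. The same generator then fills a noise wavetable, which is looped to modulate the amplitude of a sine wave.

```python
import math
from itertools import islice

def lcg(seed, a, c, m):
    """Linear congruential generator: x(n+1) = (a * x(n) + c) mod m.
    Fully deterministic: the same seed always yields the same series."""
    x = seed
    while True:
        x = (a * x + c) % m
        yield x

def period(seed, a, c, m):
    """Number of values produced before the series starts over.
    The next value depends only on the current one, so the first
    repeated value marks the start of the cycle."""
    seen = {}
    for i, x in enumerate(lcg(seed, a, c, m)):
        if x in seen:
            return i - seen[x]
        seen[x] = i

def noise_table(size, seed=1, a=25173, c=13849, m=65536):
    """Wavetable preloaded with pseudo-random samples scaled to [-1, 1)."""
    return [2.0 * x / m - 1.0 for x in islice(lcg(seed, a, c, m), size)]

def noise_am(freq, table, sr=44100, n_samples=44100, depth=0.5):
    """Sine wave whose amplitude is modulated by looping through the noise table."""
    out = []
    for n in range(n_samples):
        carrier = math.sin(2 * math.pi * freq * n / sr)
        noise = 0.5 * (table[n % len(table)] + 1.0)   # rescaled to [0, 1)
        out.append(carrier * (1.0 - depth * noise))    # gain stays in (1 - depth, 1]
    return out

print(period(seed=7, a=5, c=3, m=16))  # 16: the series repeats after 16 values
```

Making the series longer simply means choosing a larger modulus: with the parameters used for the wavetable above, the cycle is 65,536 values long instead of 16, but it still eventually starts over.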

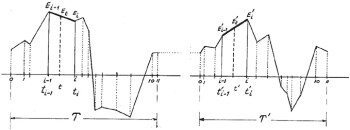

One of these random production methods was introduced by Iannis Xenakis (once again; see article 19): Dynamic Stochastic Synthesis, which he implemented in the GenDy system in the early '90s. In short, the waveform is made up of points linked by segments, and each point is recalculated from one wave period to the next by applying probability functions to its previous position.

Graphic synthesis

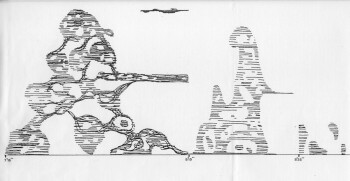

To finish our short tour of weird synthesis forms, I have chosen a somewhat unexpected method: using an image as a data source to be transformed into sound. As surprising as that might seem at first, remember that we live in an era where computers are ubiquitous and everything can be digitized! Following this logic, binary data is binary data, regardless of its source. You only need to send it to a DAC (Digital-to-Analog Converter) to turn it into sound, and that’s that.
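The "binary data is binary data" argument can be illustrated with a minimal sketch, assuming the image has already been decoded into rows of 8-bit grayscale values (decoding is left out). The pixels are simply scanned row by row and written, unchanged, as samples of a WAV file using Python's standard `wave` module. This naive raster scan is only one of many possible mappings, and the function name is hypothetical; it is not how UPIC or Harmor work.

```python
import io
import wave

def pixels_to_wav(rows, sr=8000):
    """Scan a grayscale image row by row and write each pixel (an int in 0-255)
    directly as one unsigned 8-bit PCM sample. Returns the WAV file as bytes."""
    data = bytes(p for row in rows for p in row)
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(1)      # 8-bit WAV samples are unsigned, just like pixel values
        w.setframerate(sr)
        w.writeframes(data)
    return buf.getvalue()

# A tiny 4x4 "image" whose rows repeat a dark-mid-bright-mid pattern.
wav_bytes = pixels_to_wav([[0, 128, 255, 128]] * 4)
```

Played back, the repeating rows of this toy image produce a periodic wave; a real photograph would give a much noisier result.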

However, translating graphical symbols into sound predates the digital era: in 1925 a patent was granted for the photographic notation of musical sounds and, in the decades that followed, several electronic instruments based on photoelectric sound generators appeared. Conductor Leopold Stokowski was one of the first advocates of these new methods of sound creation. But once again it was Iannis Xenakis who championed them in the digital era, most notably with the UPIC system, developed at the Center for Mathematical and Automated Music Studies (CEMAMu, Centre d’Etudes de Mathématique et Automatique Musicales) in Paris.

The system reads “curves” drawn on a graphics tablet. Each curve represents time and pitch simultaneously (time runs along the horizontal axis, pitch along the vertical one), and the operator can modify and move it at will. It is also possible to work directly with audio samples. However, it wasn’t until 1991 that computers allowed the UPIC, first created in 1977, to process data in real time. Among the tools available to the general public today that use images as a sound source is Harmor, the virtual synth/sampler by Image-Line, the creators of FL Studio.
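To show how a single curve can encode time and pitch at once, here is a minimal sketch in the spirit of UPIC, not a reconstruction of it. The "drawn" curve is assumed to be a list of (time in seconds, frequency in Hz) points standing in for tablet input, and the function names and sample rate are hypothetical. The curve is interpolated linearly and played by a sine oscillator whose phase is accumulated sample by sample, so glides stay smooth instead of clicking.

```python
import math

def interp(points, t):
    """Piecewise-linear lookup of the curve's frequency at time t (seconds)."""
    if t <= points[0][0]:
        return points[0][1]
    for (t0, f0), (t1, f1) in zip(points, points[1:]):
        if t <= t1:
            return f0 + (f1 - f0) * (t - t0) / (t1 - t0)
    return points[-1][1]

def render_curve(points, sr=8000, amp=0.5):
    """Play a drawn curve: horizontal axis = time, vertical axis = pitch (Hz)."""
    n = int(points[-1][0] * sr)
    phase = 0.0
    out = []
    for i in range(n):
        freq = interp(points, i / sr)
        out.append(amp * math.sin(phase))
        phase += 2 * math.pi * freq / sr   # accumulate phase so pitch changes are click-free
    return out

# An upward glide from 220 Hz to 440 Hz over one second, then a held note.
curve = [(0.0, 220.0), (1.0, 440.0), (1.5, 440.0)]
samples = render_curve(curve)   # 1.5 s at 8 kHz = 12000 samples
```

Moving or reshaping the curve, as a UPIC operator would, just means editing the list of points before rendering it again.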

What next?

In the penultimate article I mentioned that this series would end after a review of several weird sound synthesis forms. But I’ve decided to give you a bonus in the form of two additional articles devoted to a short selection of rather unique instruments…

Until next time!